“Meadows’ Thinking in Systems is a concise and crucial book offering insight for problem solving on scales ranging from the personal to the global. Edited by the Sustainability Institute’s Diana Wright, this essential primer brings systems thinking out of the realm of computers and equations and into the tangible world, showing readers how to develop the systems-thinking skills that thought leaders across the globe consider critical for 21st-century life.

In a world growing ever more complicated, crowded, and interdependent, Thinking in Systems helps readers avoid confusion and helplessness, the first step toward finding proactive and effective solutions.”

Goodreads

Thinking in Systems: A Primer by Donella Meadows is an absolutely wonderful introduction to the world of systems and systems thinking. It lays out the conceptual landscape of the entire discipline in language that is accessible to everyone, including non-technical people. It is a basic book which covers only the most basic principles of systems thinking and modelling.

The initial chapter lays out the world of systems, and the second explains basic systems terminology such as stocks, flows, and feedback. The subsequent chapter uses these terms to showcase some typical system structures in what Donella calls the “Systems Zoo”. It is fascinating to explore what remarkable behaviours can emerge even from very simple arrangements of water, taps, and bathtubs! I have written before about modelling complex technical systems like meshes of water hoses, but this book is the master class in that way of thinking about absolutely everything in the world.

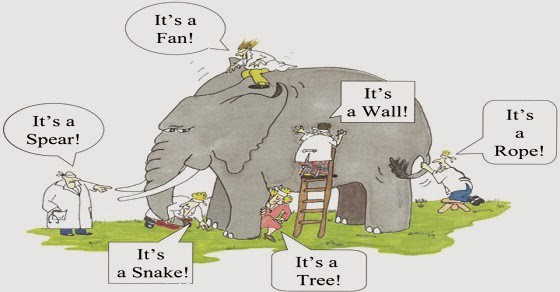

The next section covers the properties of complex systems in some detail. It explains how the principles of feedback loops and stocks lead to a rich diversity of nonlinear behaviour, and how the human mind is singularly ill-equipped to comprehend all these forces acting on each other. The resultant “bounded rationality” of actors in systems is a major cause of all the confusion we see in the world around us.

Next come some particularly perverse but unfortunately common system behaviours that the author calls “System Traps”. These are followed by a list of leverage points which can be acted upon to alter system behaviour effectively, provided we understand them correctly. The book closes with some heartfelt advice on being an effective systems thinker (or any kind of thinker at all).

Rather than teaching the technicalities, the author places far greater importance on exposing the rich, complicated, and unexpected nature of real-world systems through day-to-day examples. She repeatedly cautions against the idea of “control” in a world which defies control by its very nature. In many ways, this is a book about people and their attitudes towards the world. If you have ever heard the phrase “all technical problems are people problems” – this book will make it clear exactly why that is. It warns against false causalities, simplistic explanations, and impulsive action just as it exhorts empathy and humility in our intellectual endeavours. A lot of the theory about teams and organizations has emerged from the principles of systems thinking, and this book explains those principles very effectively.

If you want to understand the world we live in and the systems that exist all around us, Thinking in Systems: A Primer is an absolutely essential starting point.

The book contains nice summaries throughout chapters which sum up sections, and there is a comprehensive redux at the end. The following are some excerpts from the book that I personally felt were most impactful. Hope they convince you to get your own copy ASAP!

System and System Thinking Overview

- A system is an interconnected set of elements that is coherently organized in a way that achieves something.

- Systems must consist of three kinds of things: elements, interconnections, and a function or purpose.

- The system, to a large extent, causes its own behavior! An outside event may unleash that behavior, but the same outside event applied to a different system is likely to produce a different result.

- Once we see the relationship between structure and behavior, we can begin to understand how systems work, what makes them produce poor results, and how to shift them into better behavior patterns.

- Systems happen all at once. They are connected not just in one direction, but in many directions simultaneously.

- A system is more than the sum of its parts. It may exhibit adaptive, dynamic, goal-seeking, self-preserving, and sometimes evolutionary behavior.

- There is an integrity or wholeness about a system and an active set of mechanisms to maintain that integrity.

- Systems can be self-organizing, and often are self-repairing over at least some range of disruptions. They are resilient, and many of them are evolutionary.

- Many of the interconnections in systems operate through the flow of information. Information holds systems together and plays a great role in determining how they operate.

- A system’s function or purpose is not necessarily spoken, written, or expressed explicitly, except through the operation of the system. Purposes are deduced from behavior, not from rhetoric or stated goals.

- An important function of almost every system is to ensure its own perpetuation.

- One of the most frustrating aspects of systems is that the purposes of subunits may add up to an overall behavior that no one wants.

- Keeping sub-purposes and overall system purposes in harmony is an essential function of successful systems.

- Change in purpose changes a system profoundly, even if every element and interconnection remains the same.

Systems Terminology and Modelling

Stocks and Flows

- Stocks are the elements of the system that you can see, feel, count, or measure at any given time.

- Stocks change over time through the actions of a flow.

- All models, system diagrams, and descriptions, whether mental models or mathematical, are simplifications of the real world.

- A stock takes time to change, because flows take time to flow – Results are lagging indicators of processes

- Stocks act as delays or buffers or shock absorbers in systems.

- Changes in stocks set the pace of the dynamics of systems.

- Time lags that come from slowly changing stocks can cause problems in systems, but they also can be sources of stability.

- The presence of stocks allows inflows and outflows to be independent of each other and temporarily out of balance with each other.

- Stocks allow inflows and outflows to be decoupled and to be independent and temporarily out of balance with each other.

- Systems thinkers see the world as a collection of stocks along with the mechanisms for regulating the levels in the stocks by manipulating flows.
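
To make the bathtub picture concrete, here is a minimal sketch of a single stock with one inflow and one outflow. The function name, the constant flow rates, and the numbers are my own illustrative assumptions, not something taken from the book.

```python
def simulate_stock(initial, inflow, outflow, steps, dt=1.0):
    """Track one stock over time with constant flows (simple Euler steps).

    All names and units here are hypothetical; think litres and minutes.
    """
    level = initial
    history = [level]
    for _ in range(steps):
        # The stock changes only through its flows, and it cannot go below empty.
        level = max(0.0, level + (inflow - outflow) * dt)
        history.append(level)
    return history


# Tap closed, drain open: the tub empties gradually rather than instantly.
draining = simulate_stock(initial=50.0, inflow=0.0, outflow=5.0, steps=10)

# Tap and drain matched: the level holds steady even though water keeps moving.
balanced = simulate_stock(initial=50.0, inflow=5.0, outflow=5.0, steps=10)

print(draining)
print(balanced)
```

The draining run shows why a stock acts as a buffer: the outflow can exceed the inflow for a while, and the level only changes as fast as the flows allow.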

Feedback Loops

- A feedback loop is formed when changes in a stock affect the flows into or out of that same stock.

- If you see a behavior that persists over time, there is likely a mechanism creating that consistent behavior. That mechanism operates through a feedback loop.

- Balancing feedback loops are equilibrating or goal-seeking structures in systems and are both sources of stability and sources of resistance to change.

- Reinforcing loops are found wherever a system element has the ability to reproduce itself or to grow as a constant fraction of itself.

- Reinforcing feedback loops are self-enhancing, leading to exponential growth or to runaway collapses over time. They are found whenever a stock has the capacity to reinforce or reproduce itself.

- The time it takes for an exponentially growing stock to double in size, the “doubling time,” equals approximately 70 divided by the growth rate (expressed as a percentage).

- The information delivered by a feedback loop—even nonphysical feedback—can only affect future behavior; it can’t deliver a signal fast enough to correct behavior that drove the current feedback. Even non-physical information takes time to feedback into the system.

- Complex behaviors of systems often arise as the relative strengths of feedback loops shift, causing first one loop and then another to dominate behavior. A stock governed by linked reinforcing and balancing loops will grow exponentially if the reinforcing loop dominates the balancing one. It will die off if the balancing loop dominates the reinforcing one. It will level off if the two loops are of equal strength.

- In physical, exponentially growing systems, there must be at least one reinforcing loop driving the growth and at least one balancing loop constraining the growth, because no physical system can grow forever in a finite environment.

- Systems with similar feedback structures produce similar dynamic behaviors.
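
Two of the points above are easy to check numerically: the "70 divided by the growth rate" rule, and the way a stock grows, shrinks, or levels off depending on which loop dominates. The sketch below is my own illustration. It assumes growth compounds once per period and that both loops are simple fixed fractions of the stock; all function names are hypothetical.

```python
import math


def rule_of_70(growth_percent):
    """Approximate doubling time: 70 divided by the growth rate in percent."""
    return 70.0 / growth_percent


def exact_doubling_time(growth_percent):
    """Doubling time, assuming growth compounds once per period."""
    return math.log(2) / math.log(1 + growth_percent / 100.0)


def run_stock(initial, reinforcing_rate, balancing_rate, steps):
    """One stock with a reinforcing inflow and a balancing outflow,
    each a fixed fraction of the current stock (e.g. births and deaths)."""
    level = initial
    for _ in range(steps):
        level += level * reinforcing_rate - level * balancing_rate
    return level


# Compare the rule of thumb with the exact figure for a few growth rates.
for rate in (1, 2, 7):
    print(rate, rule_of_70(rate), round(exact_doubling_time(rate), 2))

print(run_stock(100.0, 0.03, 0.01, 50))  # reinforcing loop dominates: grows
print(run_stock(100.0, 0.01, 0.03, 50))  # balancing loop dominates: shrinks
print(run_stock(100.0, 0.02, 0.02, 50))  # equal strength: stays level
```

The rule of thumb tracks the exact value closely at low growth rates and drifts a little as the rate rises, which is about what you would expect from an approximation.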

Delays in Systems

- Delays are pervasive in systems, and they are strong determinants of behavior. Changing the length of a delay may (or may not, depending on the type of delay and the relative lengths of other delays) make a large change in the behavior of a system.

- A delay in a balancing feedback loop makes a system likely to oscillate.

- The higher and faster you grow, the farther and faster you fall, when you’re building up a capital stock dependent on a nonrenewable resource.
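
The claim that a delay in a balancing loop makes a system likely to oscillate can be seen in a few lines. This is a rough sketch under my own assumptions: a hypothetical inventory that is corrected each step by a fixed fraction (`gain`) of the gap to a target, where the gap is measured from a reading that is `delay` steps old.

```python
def inventory_with_delay(delay, steps=40, target=100.0, start=50.0, gain=0.3):
    """Balancing loop acting on stale information.

    Each step closes a fraction `gain` of the gap between `target` and the
    inventory as it was `delay` steps ago. All parameters are illustrative.
    """
    history = [start]
    for t in range(steps):
        observed = history[max(0, t - delay)]  # the delayed reading
        history.append(history[-1] + gain * (target - observed))
    return history


no_delay = inventory_with_delay(delay=0)
delayed = inventory_with_delay(delay=3)

print(max(no_delay))      # approaches the target from below without overshooting
print(max(delayed))       # overshoots the target
print(min(delayed[5:]))   # then swings back below it
```

With fresh information the loop glides to the target. With the same correction rule applied to old information, it keeps ordering after the shelf is already full, then cuts back too late.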

Some examples of Systems

- Stock with Two Competing Balancing Loops—a Thermostat

- Stock with One Reinforcing Loop and One Balancing Loop—Population and Industrial Economy

- A System with Delays—Business Inventory

- A Renewable Stock Constrained by a Nonrenewable Stock—an Oil Economy

- Renewable Stock Constrained by a Renewable Stock—a Fishing Economy

- Nonrenewable resources are stock-limited. The entire stock is available at once, and can be extracted at any rate (limited mainly by extraction capital). But since the stock is not renewed, the faster the extraction rate, the shorter the lifetime of the resource.

- Renewable resources are flow-limited. They can support extraction or harvest indefinitely, but only at a finite flow rate equal to their regeneration rate. If they are extracted faster than they regenerate, they may eventually be driven below a critical threshold and become, for all practical purposes, nonrenewable.
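
The difference between stock-limited and flow-limited resources can be sketched numerically. The model below is my own simplification, not the book's: I assume the renewable stock regenerates along a logistic curve, and every name and number is hypothetical.

```python
def nonrenewable_lifetime(reserves, extraction_rate):
    """Stock-limited: lifetime is simply reserves divided by extraction rate."""
    return reserves / extraction_rate


def fish_stock(harvest, years=200, stock=1000.0, capacity=1000.0, regen_rate=0.2):
    """Flow-limited: a renewable stock under a constant yearly harvest.

    Regeneration is assumed to be logistic, so it peaks at
    regen_rate * capacity / 4 (50 per year with these defaults).
    """
    for _ in range(years):
        regeneration = regen_rate * stock * (1 - stock / capacity)
        stock = max(0.0, stock + regeneration - harvest)
    return stock


# Doubling the extraction rate halves the lifetime of a nonrenewable stock.
print(nonrenewable_lifetime(1000.0, 10.0), nonrenewable_lifetime(1000.0, 20.0))

# Harvest below the peak regeneration rate: the stock settles at a lower level.
print(fish_stock(harvest=40.0))

# Harvest above the peak regeneration rate: the stock collapses and stays at zero.
print(fish_stock(harvest=60.0))
```

Once the stock hits zero in this sketch there is nothing left to regenerate from, which is the "for all practical purposes, nonrenewable" outcome described above.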

Properties of Complex Systems

Resilience

- Resilience is a measure of a system’s ability to survive and persist within a variable environment. The opposite of resilience is brittleness or rigidity.

- There are always limits to resilience.

- Resilience is not the same thing as being static or constant over time. Resilient systems can be very dynamic.

- Systems need to be managed not only for productivity or stability, they also need to be managed for resilience—the ability to recover from perturbation, the ability to restore or repair themselves.

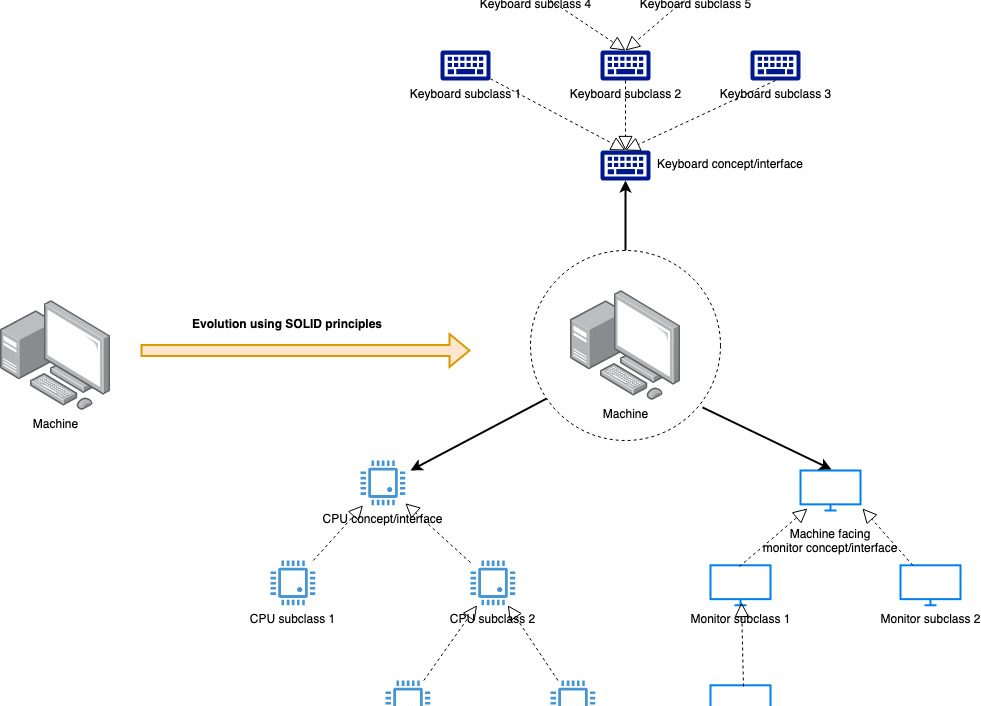

Self Organization

- The capacity of a system to make its own structure more complex is called self-organization.

- Self-organization produces heterogeneity and unpredictability.

- It is likely to come up with whole new structures, whole new ways of doing things.

- It requires freedom and experimentation, and a certain amount of disorder.

- Conditions that encourage self-organization often can be scary for individuals and threatening to power structures.

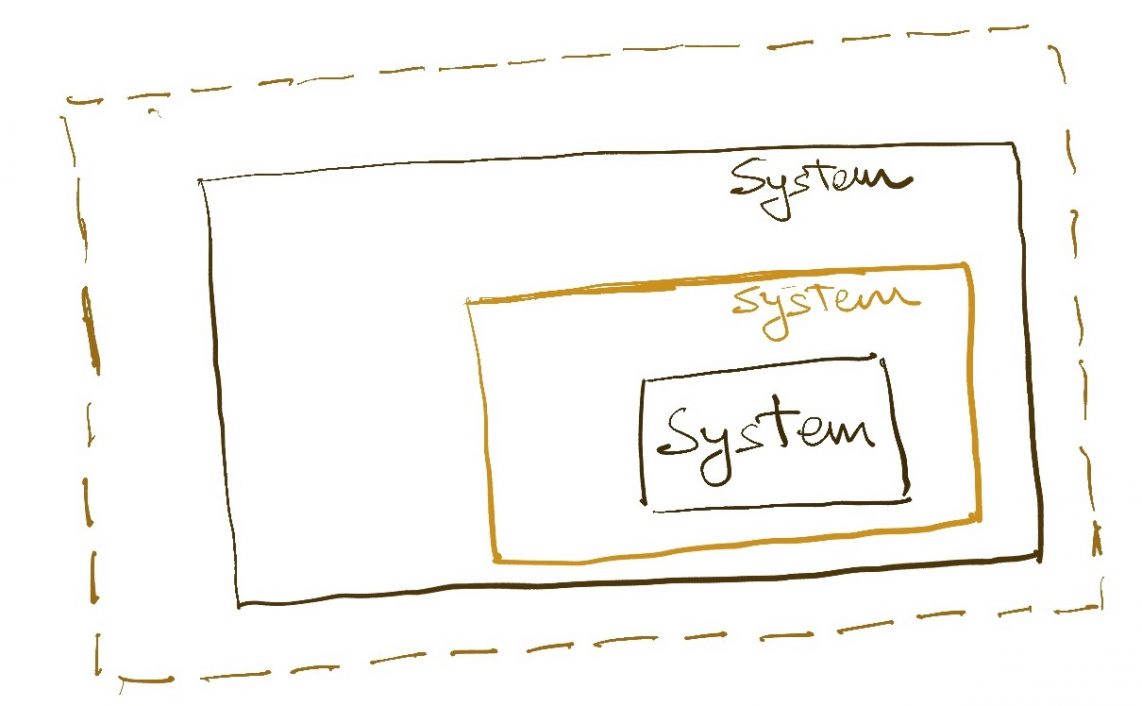

- If subsystems can largely take care of themselves, regulate themselves, maintain themselves, and yet serve the needs of the larger system, while the larger system coordinates and enhances the functioning of the subsystems, a stable, resilient, and efficient structure results.

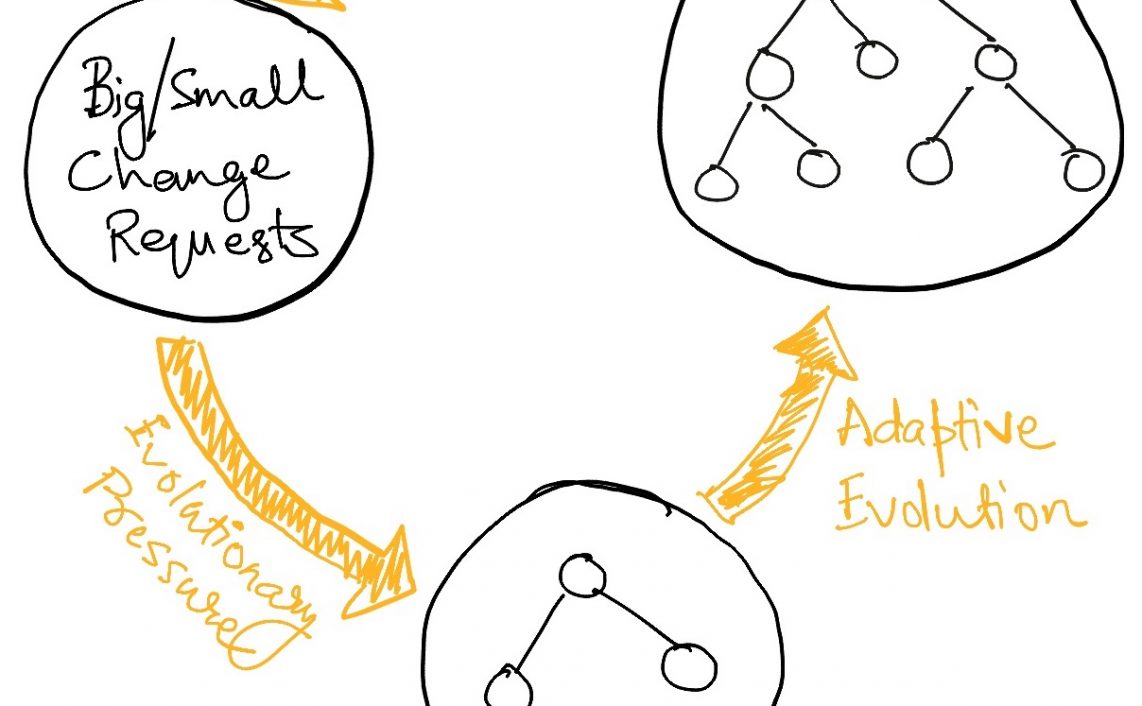

Evolution

- Complex systems can evolve from simple systems only if there are stable intermediate forms.

- In hierarchical systems relationships within each subsystem are denser and stronger than relationships between subsystems. Everything is still connected to everything. If these differential information links within and between each level of the hierarchy are designed right, feedback delays are minimized.

- Hierarchical systems evolve from the bottom up.

- The purpose of the upper layers of the hierarchy is to serve the purposes of the lower layers. This is something, unfortunately, that both the higher and the lower levels of a greatly articulated hierarchy easily can forget. Therefore, many systems are not meeting our goals because of malfunctioning hierarchies.

Nonlinear Relationships

- Systems fool us by presenting themselves—or we fool ourselves by seeing the world—as a series of events.

- It’s endlessly engrossing to take in the world as a series of events, and constantly surprising, because that way of seeing the world has almost no predictive or explanatory value.

- System structure is the source of system behavior. System behavior reveals itself as a series of events over time.

- A linear relationship between two elements in a system can be drawn on a graph with a straight line.

- A nonlinear relationship is one in which the cause does not produce a proportional effect. The relationship between cause and effect can only be drawn with curves or wiggles, not with a straight line.

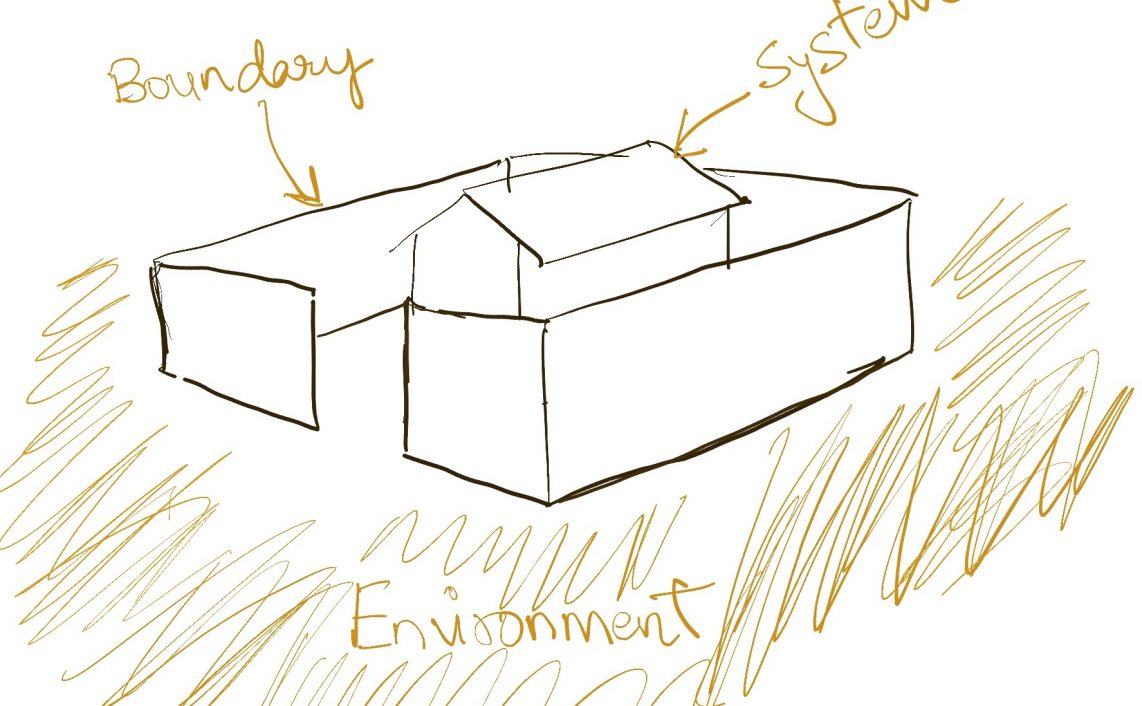
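
A quick way to tell the two kinds of relationship apart is to double the cause and see whether the effect doubles. Both response curves below are made up for illustration (a hypothetical crop yield as a function of fertilizer); only the proportionality check matters.

```python
def linear_yield(fertilizer):
    """Hypothetical linear response: every extra unit adds the same yield."""
    return 2.0 * fertilizer


def saturating_yield(fertilizer):
    """Hypothetical nonlinear response: extra units add less and less."""
    return 100.0 * fertilizer / (fertilizer + 50.0)


for response in (linear_yield, saturating_yield):
    single = response(50.0)
    double = response(100.0)
    # A linear relationship gives exactly twice the effect for twice the cause.
    print(response.__name__, single, double, double == 2 * single)
```

The saturating curve is only one of many possible nonlinear shapes, but it is enough to show why extrapolating from small doses to large ones can mislead.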

System Boundaries

- The greatest complexities arise exactly at boundaries.

- There are no separate systems. The world is a continuum. Where to draw a boundary around a system depends on the purpose of the discussion—the questions we want to ask.

- The right boundary for thinking about a problem rarely coincides with the boundary of an academic discipline, or with a political boundary.

- Boundaries are of our own making, and they can and should be reconsidered for each new discussion, problem, or purpose.

Limiting Factors

- At any given time, the input that is most important to a system is the one that is most limiting.

- Insight comes not only from recognizing which factor is limiting, but from seeing that growth itself depletes or enhances limits and therefore changes what is limiting.

- To shift attention from the abundant factors to the next potential limiting factor is to gain real understanding of, and control over, the growth process.

- For any physical entity in a finite environment, perpetual growth is impossible. Ultimately, the choice is not to grow forever but to decide what limits to live within.
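
The idea that the most limiting input matters most, and that relieving one limit just exposes the next, can be sketched as a minimum over inputs. The inputs, quantities, and function name below are hypothetical, and the "output equals the smallest supported amount" rule is my own simplifying assumption.

```python
def limiting_factor(available, required_per_unit):
    """Return (name, units) for the input that supports the least output."""
    supported = {
        name: available[name] / required_per_unit[name]
        for name in required_per_unit
    }
    name = min(supported, key=supported.get)
    return name, supported[name]


# Hypothetical inputs for a crop, and how much of each one unit of yield needs.
needs = {"nitrogen": 2, "phosphorus": 1, "water": 5}
stocks = {"nitrogen": 100, "phosphorus": 10, "water": 500}

print(limiting_factor(stocks, needs))

# Adding more of an abundant input changes nothing.
print(limiting_factor({**stocks, "nitrogen": 1000}, needs))

# Relieving the limiting input shifts the limit to a different factor.
print(limiting_factor({**stocks, "phosphorus": 80}, needs))
```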

Bounded Rationality

- When there are long delays in feedback loops, some sort of foresight is essential. To act only when a problem becomes obvious is to miss an important opportunity to solve the problem.

- Bounded rationality means that people make quite reasonable decisions based on the information they have. But they don’t have perfect information, especially about more distant parts of the system.

System traps

- Policy Resistance

- The most effective way of dealing with policy resistance is to find a way of aligning the various goals of the subsystems, usually by providing an overarching goal that allows all actors to break out of their bounded rationality.

- Harmonization of goals in a system is not always possible. It can be found only by letting go of more narrow goals and considering the long-term welfare of the entire system.

- Tragedy of the commons : Tragedy of the commons comes about when there is escalation, or just simple growth, in a commonly shared, erodable environment.

- Drift to Low Performance : Allowing performance standards to be influenced by past performance, especially if there is a negative bias in perceiving past performance, sets up a reinforcing feedback loop of eroding goals that sets a system drifting toward low performance.

- Escalation : When the state of one stock is determined by trying to surpass the state of another stock—and vice versa—then there is a reinforcing feedback loop carrying the system into an arms race, a wealth race, a smear campaign, escalating loudness, escalating violence. The escalation is exponential and can lead to extremes surprisingly quickly.

- Success to the successful

- This system trap is found whenever the winners of a competition receive, as part of the reward, the means to compete even more effectively in the future.

- If the winners of a competition are systematically rewarded with the means to win again, a reinforcing feedback loop is created by which, if it is allowed to proceed uninhibited, the winners eventually take all, while the losers are eliminated.

- Shifting responsibility to the intervenor : The trap is formed if the intervention, whether by active destruction or simple neglect, undermines the original capacity of the system to maintain itself.

- Rule Beating

- Rule beating is usually a response of the lower levels in a hierarchy to overrigid, deleterious, unworkable, or ill-defined rules from above.

- Rules to govern a system can lead to rule beating—perverse behavior that gives the appearance of obeying the rules or achieving the goals, but that actually distorts the system.

- The way out is to design rules to release creativity not in the direction of beating the rules, but in the direction of achieving the purpose of the rules.

- Chasing the wrong goal

- Systems have a terrible tendency to produce exactly and only what you ask them to produce.

- … confuse effort with result, one of the most common mistakes in designing systems around the wrong goal.

- System behavior is particularly sensitive to the goals of feedback loops. If the goals—the indicators of satisfaction of the rules—are defined inaccurately or incompletely, the system may obediently work to produce a result that is not really intended or wanted.
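
Of the traps above, "Drift to Low Performance" is the easiest to sketch in code. This is a toy model under my own assumptions: the goal is nudged toward perceived performance, perception is pessimistic by a fixed `bias`, and actual performance follows the goal. None of the names or numbers come from the book.

```python
def eroding_goal(steps=30, goal=100.0, performance=100.0, bias=0.1, adjust=0.5):
    """Return the goal over time when standards follow perceived performance."""
    goals = [goal]
    for _ in range(steps):
        perceived = performance * (1 - bias)          # negative bias in perception
        goal += adjust * (perceived - goal)           # the standard drifts toward it
        performance += adjust * (goal - performance)  # effort tracks the standard
        goals.append(goal)
    return goals


drifting = eroding_goal()
anchored = eroding_goal(bias=0.0)

print(drifting[0], drifting[-1])  # the goal ends well below where it started
print(anchored[0], anchored[-1])  # with no perception bias, the goal holds
```

In this toy version, holding the standard fixed (or removing the pessimistic bias) is enough to stop the slide, because the reinforcing loop has nothing left to feed on.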

Identifying and exploiting leverage points

- One of the big mistakes we make is to strip away “emergency” response mechanisms because they aren’t often used and they appear to be costly. In the long term, we drastically narrow the range of conditions over which the system can survive.

- Democracy works better without the brainwashing power of centralized mass communications. Traditional controls on fishing were sufficient until sonar spotting and drift nets and other technologies made it possible for a few actors to catch the last fish. The power of big industry calls for the power of big government to hold it in check; a global economy makes global regulations necessary.

- The ability to self-organize is the strongest form of system resilience. A system that can evolve can survive almost any change, by changing itself.

- Self-organization is basically a matter of an evolutionary raw material—a highly variable stock of information from which to select possible patterns—and a means for experimentation, for selecting and testing new patterns.

- Encouraging variability and experimentation and diversity means “losing control.”

- Changing the players in the system is a low-level intervention, as long as the players fit into the same old system. The exception to that rule is at the top, where a single player can have the power to change the system’s goal.

- The shared idea in the minds of society, the great big unstated assumptions, constitute that society’s paradigm, or deepest set of beliefs about how the world works. These beliefs are unstated because it is unnecessary to state them—everyone already knows them.

- Paradigms are the sources of systems.

- Paradigms are shared opinions, not facts. There are no “true” paradigms.

- Systems modelers say that we change paradigms by building a model of the system, which takes us outside the system and forces us to see it whole.

- If no paradigm is right, you can choose whatever one will help to achieve your purpose.

- Magical leverage points are not easily accessible, even if we know where they are and which direction to push on them.

Advice on working with systems effectively

- Get the Beat of the System : Before you disturb the system in any way, watch how it behaves.

- Expose Your Mental Models to the Light of Day

- Mental flexibility—the willingness to redraw boundaries, to notice that a system has shifted into a new mode, to see how to redesign structure—is a necessity when you live in a world of flexible systems.

- Honour, Respect, and Distribute Information

- Use Language with Care and Enrich It with Systems Concepts : The first step in respecting language is keeping it as concrete, meaningful, and truthful as possible—part of the job of keeping information streams clear. The second step is to enlarge language to make it consistent with our enlarged understanding of systems.

- Pay Attention to What Is Important, Not Just What Is Quantifiable – Pretending that something doesn’t exist if it’s hard to quantify leads to faulty models.

- Make Feedback Policies for Feedback Systems

- Go for the Good of the Whole : Don’t maximize parts of systems or subsystems while ignoring the whole.

- Listen to the Wisdom of the System

- Aid and encourage the forces and structures that help the system run itself.

- Don’t be an unthinking intervenor and destroy the system’s own self-maintenance capacities.

- Locate Responsibility in the System : That’s a guideline both for analysis and design. In analysis, it means looking for the ways the system creates its own behavior.

- “Intrinsic responsibility” means that the system is designed to send feedback about the consequences of decision making directly and quickly and compellingly to the decision makers.

- Stay Humble—Stay a Learner

- We can celebrate and encourage self-organization, disorder, variety, and diversity.

- You need to be watching both the short and the long term—the whole system.

- Defy the Disciplines – In spite of what you majored in, or what the textbooks say, or what you think you’re an expert at, follow a system wherever it leads.

- Expand the Boundary of Caring – Most people already know about the interconnections that make moral and practical rules turn out to be the same rules. They just have to bring themselves to believe that which they know.

- Don’t Erode the Goal of Goodness
