Hello everyone!

In this episode of the “For the layman” series, I am going to discuss what the “cloud” is. We’ve all heard of iCloud, AWS, cloud computing, etc., and have a sense of how ubiquitous they are. Let’s understand the history and the mechanics of the Cloud a little more.

tl;dr – The Cloud is Uber for computers. It is made up of hundreds of thousands of computers (aka servers) that users can rent to run their software in exchange for a regular fee, mostly based on usage. Cloud companies take care of running the hardware with all kinds of best practices so that software developers and software development companies don’t have to, and can focus on their core work.

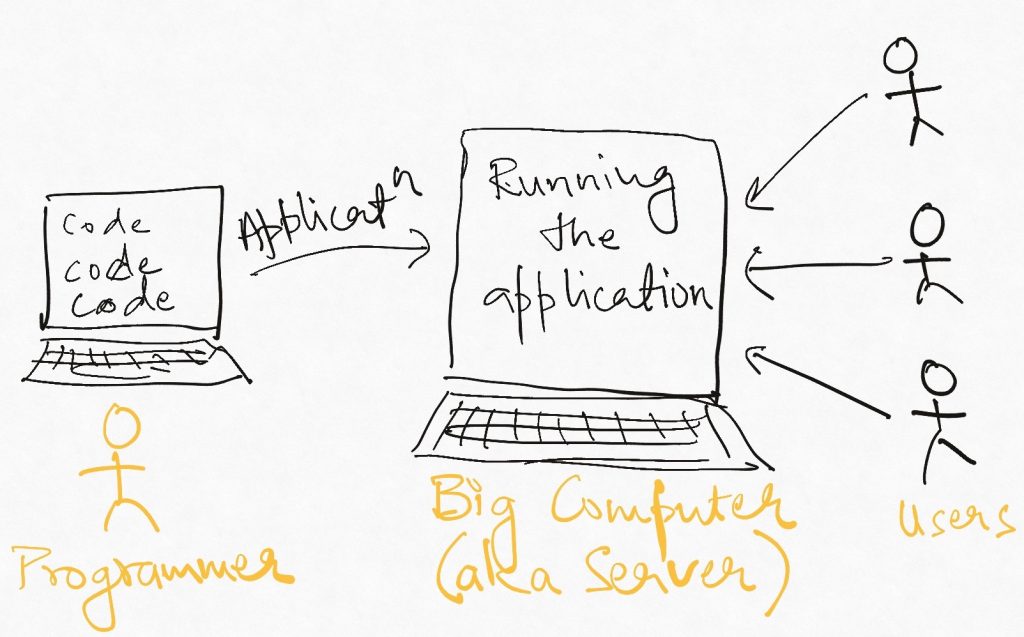

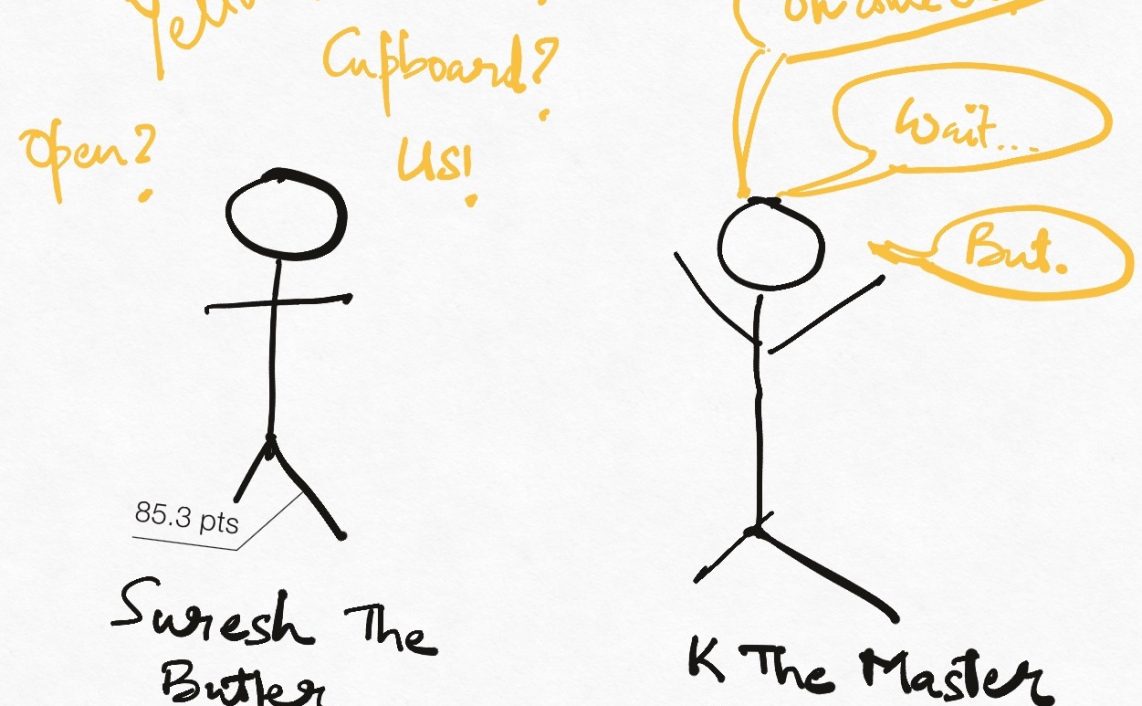

Have you ever seen one of those high-intensity surgery scenes in movies where the doctor keeps shouting at the nurses “Give me a clamp” or “Give me 5cc of <insert medicine name>”? For the longest time, software development used to be like that (only less high-stakes for the most part). Programmers would write awesome applications that could eat the world. But running these awesome apps required big computers. So as soon as the programmer finished writing the last line of code or polishing the last bit of documentation (HAHAHAHA), she would start screaming, “Give me a big computer”.

For many years, this meant going out and buying the computer, plugging it in, installing a bazillion things on it, and forever making sure no one pulled out the plug by mistake. I’m not even getting into the bureaucracy of procuring said computer. As companies started churning out more and more software, this got to be a real problem. With the rise of the cloud, today the application developer can set up a new computer herself with no more than a few clicks of the mouse. No fuss at all. The cloud has commoditized hardware and the operations around it.

To understand the rise of cloud computing, let’s look a little bit at the history of computers and their use.

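The first programmable electronic computer was (arguably) Colossus, built for the codebreakers at Bletchley Park (where Alan Turing also worked) as part of the Allied effort at intelligence gathering in WW2. “Programmable computer” means a machine that can be arranged to do many different calculations. Colossus was used against the German Lorenz cipher (the machine that famously attacked the Enigma code, Turing’s Bombe, was built for that one job only). The general-purpose computers that followed took the idea all the way: configured one way, they could crack a code; set up another way, they could compute the value of Pi to the umpy-umpth decimal.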

This is remarkable by itself because most machines before or since do only one thing. A screwdriver, a bulldozer, an airplane: all of them do exactly one thing. Even the “Swiss Army knife” is a collection of many tools, each for one purpose. So this “programmable computer” was a breakthrough in its own right because you could get it to do many different things by programming it in different ways.

Anyhow, with a dazzling array of innovations in hardware and computer science, computers became commonplace. They could be found in people’s homes, and what we know today as Silicon Valley was beginning to take shape. However, access to computers at the scale this new computing revolution wanted was still not easy. You could easily buy a computer for your home or office, but buying 50 computers was still a pain. In his book That Will Never Work, Marc Randolph recounts how, on the launch day of Netflix, his team kept rushing out to buy more computers from a neighbourhood shop because of the unexpectedly large number of people visiting the website.

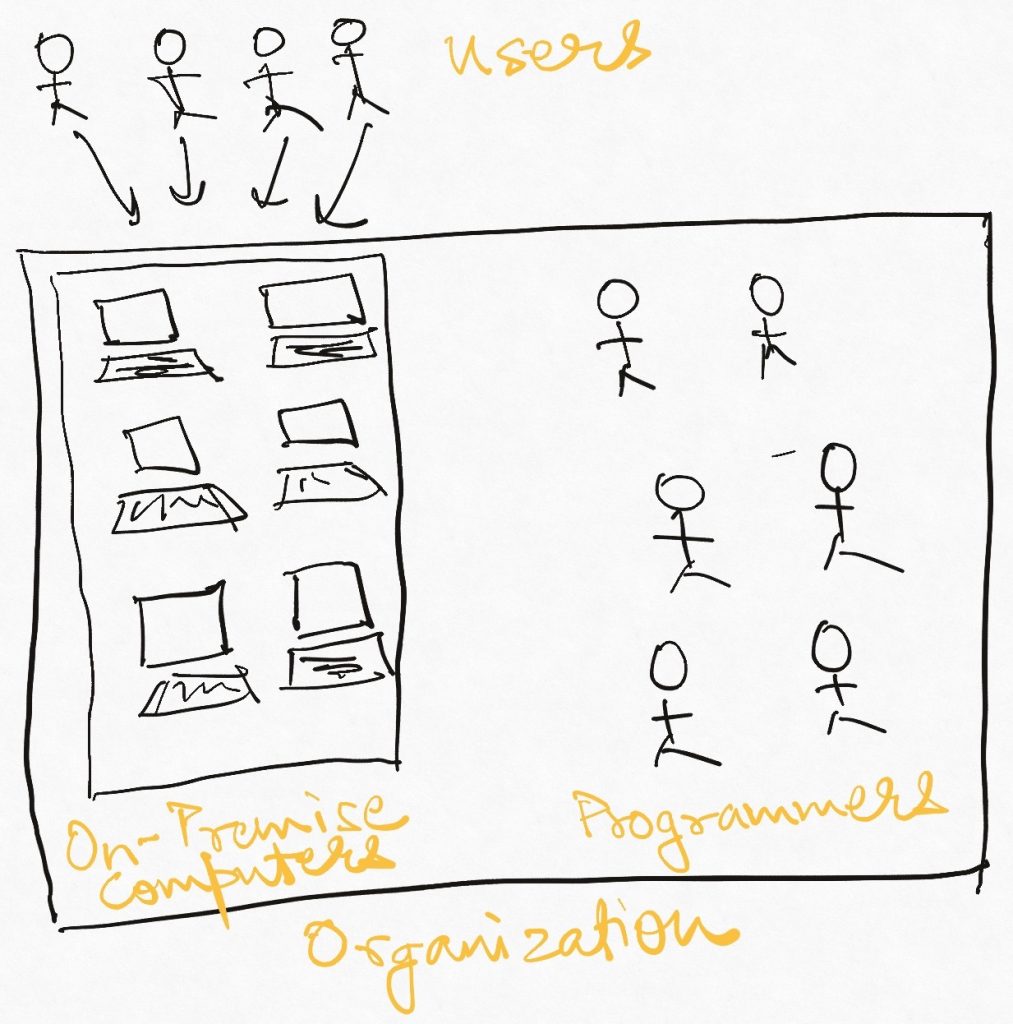

Since this buy-on-demand model did not work very well, software companies started buying and keeping computers in their offices (this is still done widely and is called on-premises, or on-prem). IT teams would set up the computers, power system, air conditioning, cable layouts, failsafes, etc. in some designated area in the office. This meant that the company always had a buffer stock of computing power at hand. The cost of this was the capital tied up in all the servers, whether they were being used or not.

And you know what the sneaky software developers did (and have been doing since)? They found out that getting computers wouldn’t be so much of a pain anymore, so they started churning out even more software. Sooo much more of it. Easy supply further fuelled the hunger for computational resources.

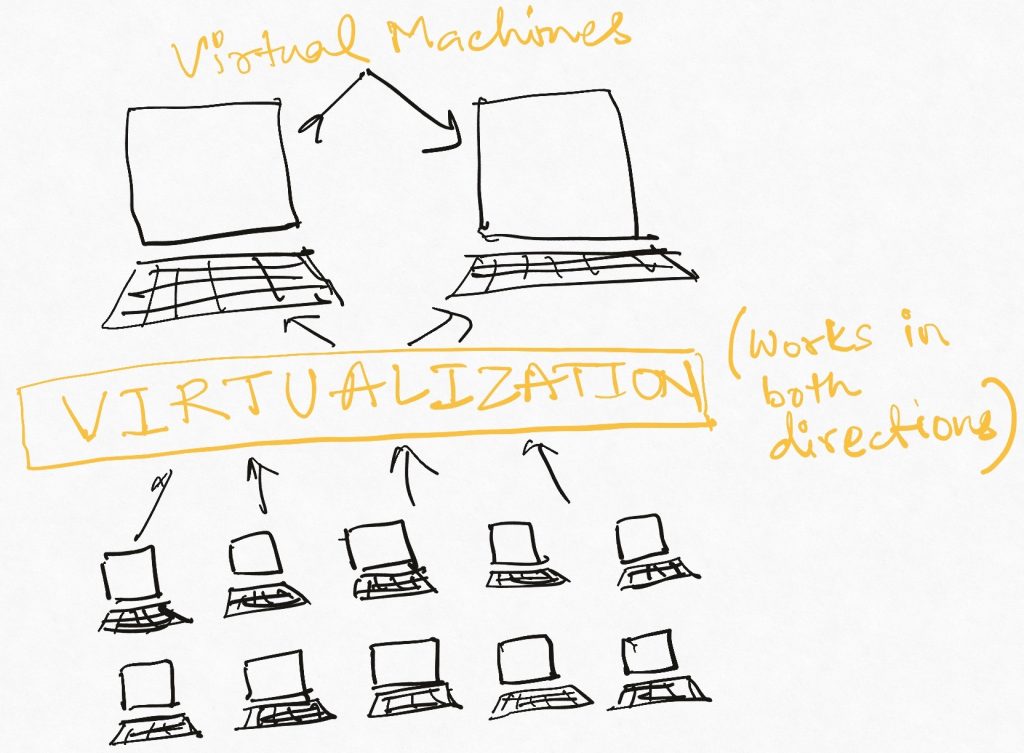

The other problem for companies was that off-the-shelf computers came in certain specific sizes. Roughly speaking, a computer is made up of storage (Hard disk), computing power (CPU cores), memory (RAM) and the network (LAN/Internet). But in the new digital age, all kinds of programs were being written which demanded each of these in different combinations. Some wanted a lot of network but didn’t do much computing (Netflix, Zoom), some stored a lot of data but didn’t have to be fast (data backup systems), and so on.

Most companies couldn’t afford to keep all combinations of such machines at hand. They were too expensive to buy, and a team of engineers would have been needed for this alone. So some smart folks at IBM, the premier computing company of its time, came up with the idea of virtualization. Virtualization allowed us to hide multiple computers and all their resources behind a curtain and think in terms of their aggregate resources. For example, ten 4-core computers with 8 GB RAM and 256 GB storage each became a collection of 40 cores, 80 GB RAM, and 2560 GB of storage which could be parcelled out into “virtual machines” as per the programmer’s requirement. In this simplified picture, all of the above resources could be combined to make 2 huge virtual machines (aka VMs) of 20 cores, 40 GB RAM, and 1280 GB storage each (real systems usually place each VM on a single physical computer, so the pooling is a little less magical than this). Wide adoption of virtualization has since hidden the actual computers (aka bare metal) from the programmer’s view.
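The pooling arithmetic above can be written out as a tiny Python sketch. It follows the same simplified picture as the paragraph: resources are just added up and divided evenly, which is not how a real hypervisor schedules VMs. The names `Resources`, `pool`, and `carve` are made up for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    """A bundle of compute resources, physical or virtual."""
    cores: int
    ram_gb: int
    storage_gb: int


def pool(hosts):
    """Add up the resources of all physical hosts into one aggregate."""
    return Resources(
        cores=sum(h.cores for h in hosts),
        ram_gb=sum(h.ram_gb for h in hosts),
        storage_gb=sum(h.storage_gb for h in hosts),
    )


def carve(total, vm_count):
    """Split an aggregate evenly into vm_count virtual machines (simplified)."""
    return [
        Resources(
            cores=total.cores // vm_count,
            ram_gb=total.ram_gb // vm_count,
            storage_gb=total.storage_gb // vm_count,
        )
        for _ in range(vm_count)
    ]


# Ten identical machines, as in the example in the text.
hosts = [Resources(cores=4, ram_gb=8, storage_gb=256) for _ in range(10)]
total = pool(hosts)
vms = carve(total, 2)

print(total)   # Resources(cores=40, ram_gb=80, storage_gb=2560)
print(vms[0])  # Resources(cores=20, ram_gb=40, storage_gb=1280)
```

The same pool could just as well be carved into forty 1-core VMs or any other mix, which is the flexibility virtualization bought.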

While virtualization gave organizations huge leeway in terms of deploying computational resources efficiently, it also introduced a huge “virtualization layer” to the already complex stack of hundreds, sometimes thousands, of computers they were running. Some companies were reaching the stage where they couldn’t house all their servers in their offices and had to open up data centers (separate facilities for storing and operating all the servers). This was obviously a huge capital investment and a distraction for these companies. They didn’t want to be in the business of running hardware, but they had no choice.

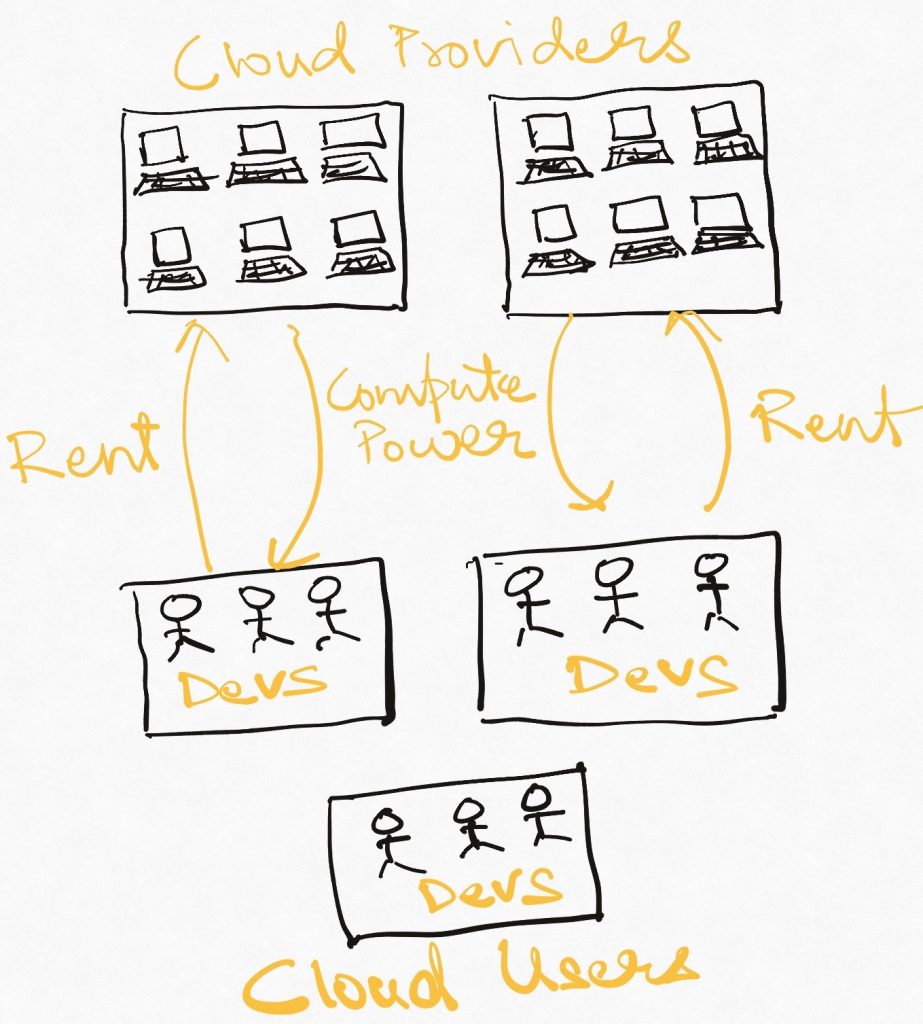

In the years after the dot-com crash of the early 2000s, some companies (especially Amazon) had a lot of computers and expertise in operating them, and were looking to make some money. They realized that with the internet potentially connecting all the computers of the world to each other, they could not only rent out their servers to people who needed to use them, but also sell their expertise in operating them reliably as a service along with the hardware. This was the origin of the cloud (or cloud computing or cloud services) as we know it today.

The users of the cloud (the renters of the servers) essentially reach out into the Cloud to lay their hands on machines, mostly virtual machines, as per their computing needs, without worrying about where they are kept, which brand they are, and whether they are connected to the power supply or not. Cloud companies like AWS, Azure, GCP, and DigitalOcean (where this blog is hosted) take care of all that so we can just focus on running the cool Facemash applications we have written. Users pay an hourly, monthly, or some other sort of recurring charge based on their usage of these machines. No more scrambling around to buy computers, connect them, and so on. With heavy automation, today even individual developers can manage more computing power than entire teams could not too long ago.
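To make the difference between renting by usage and owning a server concrete, here is a rough Python sketch. Every number in it is invented for illustration (it is not any provider’s real price list), and the function names `on_demand_cost` and `owned_cost` are hypothetical.

```python
def on_demand_cost(hours_used, hourly_rate):
    """Pay-as-you-go: the bill scales with the hours actually used."""
    return hours_used * hourly_rate


def owned_cost(purchase_price, monthly_upkeep, months):
    """Owning hardware: pay up front plus upkeep, whether it is used or not."""
    return purchase_price + monthly_upkeep * months


# All numbers below are made up for illustration, not real prices.
HOURLY_RATE = 0.10       # hypothetical rent per VM-hour
PURCHASE_PRICE = 2000.0  # hypothetical price of one server
MONTHLY_UPKEEP = 50.0    # hypothetical share of power, cooling, and staff

# Compare one year of light use (40 h/month) with round-the-clock use (730 h/month).
for hours_per_month in (40, 730):
    rented = on_demand_cost(hours_per_month * 12, HOURLY_RATE)
    owned = owned_cost(PURCHASE_PRICE, MONTHLY_UPKEEP, 12)
    print(f"{hours_per_month} h/month: rented={rented:.2f}, owned={owned:.2f}")
```

The owned server costs the same whether it runs for one hour or all year, while the rented one only costs money while it is in use. Which option comes out cheaper depends entirely on the real prices and on how heavily the machine is used.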

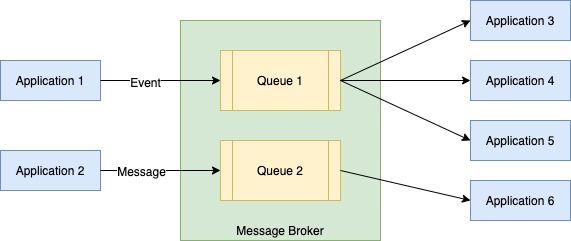

In the last decade, this process of cloudification has gone beyond hardware and into the realm of software. Most cloud providers have “managed services” under which they provide well set up and maintained installations of the most commonly used software, already running. We don’t even have to set up this software now: we just rent a running version of it for our own purposes, as if it were a physical thing. This is a huge blessing for startups since some of the most commonly used types of software like databases, firewalls, caches, and message queues are available at only a few clicks’ notice and don’t have to be installed and operated. The barrier to entry in the software world has never been lower, with more and more of the grunt work being moved off to the cloud.

Think of cloud hardware and software in terms of the “distributed car” I discussed in a previous article. The engine, the wheels, the fuel injection system, etc. are all in the cloud now, and you can have as many of them as you can pay for. As the car maker, you can now focus on your unique value addition: the branding, the interiors and leather seats, the new electric battery that only you hold the patent to, and so on.

Today, the cloud is part of the internet’s infrastructure, like highways or schools in the real world. Most people want highways and schools as a means to an end. They don’t want to have to build them themselves. The cloud plays the same role for anyone who wants to build software. The result: omnipresent software and huge cloud provider bills 🙂

