I mentioned feature toggles a couple of times in one of my recent articles on increasing deployment speed, and they have been a topic of discussion in my team at work. So today I want to dig into them in some detail: what they are, how they can be used, and what problems they can bring.

What are feature toggles

Feature Toggles (also sometimes called Feature Gates) are a kind of configuration used to switch specific features on and off at runtime. By on and off I mean that they control whether certain code paths are executed (on) or not (off). Developers use them when they want to change existing functionality or introduce new functionality in a controlled manner, rather than having the changes take effect as soon as the code is deployed.

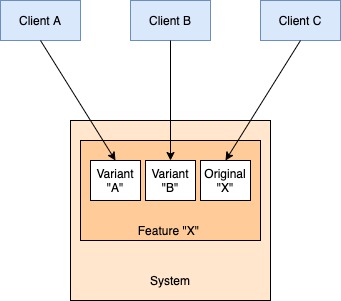

Feature Toggles need not be simple on/off switches, although that is how they are most widely used. Since they are a type of runtime configuration, they can be defined in any way we like. For example, they can enable a new feature only for employees (before the public rollout) or only in certain geographical locations. In all cases, however, they are a means to switch behaviour: whatever meets the toggle criteria gets the feature, and everything else either doesn't or gets the older behaviour.
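To make the "more than on and off" idea concrete, here is a minimal sketch of a toggle that evaluates targeting criteria instead of a single boolean. The `UserContext` record, the `TargetedToggle` class and the country codes are hypothetical names for illustration, not part of any particular toggle library; records need Java 16+.

```java
import java.util.Set;

// Hypothetical per-request context; real code would derive it from the authenticated user.
record UserContext(String userId, boolean employee, String countryCode) {}

// A toggle that is on for employees (if enabled) and for users in the listed countries.
final class TargetedToggle {
    private final boolean enabledForEmployees;
    private final Set<String> enabledCountries;

    TargetedToggle(boolean enabledForEmployees, Set<String> enabledCountries) {
        this.enabledForEmployees = enabledForEmployees;
        this.enabledCountries = Set.copyOf(enabledCountries);
    }

    // Whoever meets the criteria gets the new behaviour; everyone else keeps the old one.
    boolean isEnabledFor(UserContext user) {
        if (enabledForEmployees && user.employee()) {
            return true;
        }
        // Immutable sets reject contains(null), so guard against a missing country.
        return user.countryCode() != null && enabledCountries.contains(user.countryCode());
    }
}
```

For instance, `new TargetedToggle(true, Set.of("NL"))` turns the feature on for every employee and for all users in the Netherlands, and keeps it off for everyone else.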

Some people have told me that they think of feature toggles as the same thing as A-B testing or canary deployments. Feature Toggles can be used for both of these, but they serve a fundamentally different and far more operational purpose. The goal is not to observe the behaviour of people in different groups (A-B) or to roll out new features gradually (canary). The objective is to be able to deploy code safely and then do targeted damage control by switching off specific features if they cause trouble in production. The alternative is to roll back entire deployments, possibly containing multiple features, if any one of those features goes bad.

Why use Feature Toggles

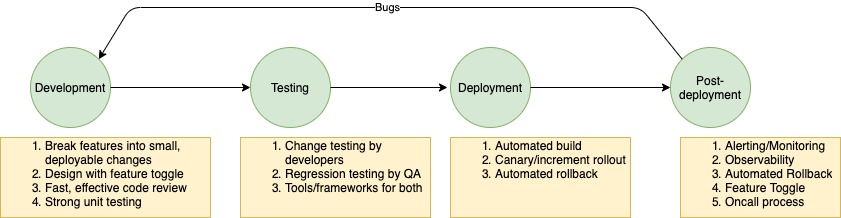

Feature Toggles have grown steadily in popularity alongside the agile school of thought, which loves to ship code early and often. The problem with this paradigm is that shipping fast can let bugs into production whenever a change is introduced. Feature Toggles are an attempt to solve this problem by decoupling the deployment of a change’s code from the actual release (activation) of that change.

We put the new change under a feature toggle (initially set to off) and then, after testing, merge the code to the deployment branch. This is good for multiple reasons, chief among them that our fellow developers get our changes quickly and can build on them, rather than working on stale code and later having to merge branches with lots of changes that conflict with theirs. This significantly reduces the time spent integrating others’ code. Code fast, merge fast, deploy fast, release whenever.

For example, let’s say we have optimized a currently inefficient method. It seems to do well in testing environments, but we cannot be sure of the change until we expose it to full production traffic. We can use a feature toggle to expose the optimized method in production conditionally. We deploy the change to production under a toggle set to off (Friday night deployment? No problem!). Then, when we are good and ready, we set up our monitoring and enable the optimization. Now we can watch system behaviour, and if something bad comes up, we disable the change and go back to fixing things.

Implementing Feature Toggles

Since Feature Toggles are a form of runtime configuration, they can be managed with whatever mechanism a team already uses for runtime configuration. Below I highlight the three most popular mechanisms in my experience.

Application Configuration Files

The most common way of implementing feature toggles is the application configuration file. Most application development frameworks support some version of this (JSON, YAML, etc.), and it is most likely the first place teams start from. This is a great start, but the problem is that toggling a feature means changing these files and deploying again, which might cause its own set of problems (maybe other changes have already been merged to the deployment branch). It is also a slow process.
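A minimal sketch of the configuration-file approach, assuming the toggles live in a standard Java `.properties` file (JSON or YAML would need a parser library instead). The class name `FileToggles` is illustrative. Because the file is read once at startup, flipping a toggle really does mean changing the file and redeploying, which is the slowness described above.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Boolean toggles read once from a .properties source such as application.properties.
final class FileToggles {
    private final Properties properties = new Properties();

    FileToggles(Reader source) {
        try {
            properties.load(source);
        } catch (IOException e) {
            // Fail fast: a broken configuration file should stop the application from starting.
            throw new UncheckedIOException(e);
        }
    }

    // A missing toggle falls back to the default; any value other than "true"
    // (ignoring case) counts as off.
    boolean isEnabled(String toggle, boolean defaultValue) {
        String value = properties.getProperty(toggle);
        return value == null ? defaultValue : Boolean.parseBoolean(value.trim());
    }
}
```

With a file containing the line `enable-optimized-method=true`, `isEnabled("enable-optimized-method", false)` returns `true`.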

Application database

The next best place is the application’s own database: all feature toggles are stored there, and the toggle condition is evaluated by reading from it. In this model, every application maintains a database for runtime configuration and manages access to it via direct DB access or via some API+UI combination, as it sees fit. Of course, optimization techniques like caching can be employed here to reduce database load.
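Here is a minimal sketch of the caching idea: toggle lookups go through a small time-based cache, so the database is queried at most about once per toggle per TTL. The `Function<String, Boolean>` loader is an assumption standing in for a real database query (for example a JDBC lookup against a hypothetical `feature_toggles` table), and the injected `Clock` is there only to make expiry testable. Records need Java 16+.

```java
import java.time.Clock;
import java.time.Duration;
import java.time.Instant;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Caches toggle values read from the application database for a fixed time-to-live.
final class CachedDbToggles {
    private record Entry(boolean enabled, Instant expiresAt) {}

    private final Function<String, Boolean> loader; // stand-in for the real DB query
    private final Duration ttl;
    private final Clock clock;
    private final Map<String, Entry> cache = new ConcurrentHashMap<>();

    CachedDbToggles(Function<String, Boolean> loader, Duration ttl, Clock clock) {
        this.loader = loader;
        this.ttl = ttl;
        this.clock = clock;
    }

    boolean isEnabled(String toggle) {
        Instant now = clock.instant();
        Entry entry = cache.get(toggle);
        if (entry == null || !now.isBefore(entry.expiresAt())) {
            // Cache miss or expired entry: read from the database again.
            // Two threads may occasionally both reload the same toggle; that is harmless here.
            Boolean value = loader.apply(toggle);
            entry = new Entry(Boolean.TRUE.equals(value), now.plus(ttl)); // unknown toggle = off
            cache.put(toggle, entry);
        }
        return entry.enabled();
    }
}
```

The trade-off is the usual one for caches: a longer TTL means less database load but a slower reaction when someone flips a toggle.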

Central Configuration Management Service

As some of you might have guessed, the logical next step in this journey is a central repository of all dynamic configuration. All applications fetch their configuration from this system (via a push or pull model) and typically keep the toggles in memory to reduce access time.
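A minimal sketch of the client side of the push model, assuming a hypothetical central service that calls `onUpdate` with the complete set of toggles whenever something changes (the transport itself, whether a webhook, a message queue or a long-poll, is left out). Each update replaces an immutable snapshot in a single atomic step, so code that reads one snapshot never sees half of an update.

```java
import java.util.Map;
import java.util.concurrent.atomic.AtomicReference;

// Client-side in-memory store for toggles pushed by a central configuration service.
final class PushedToggleStore {
    private final AtomicReference<Map<String, String>> snapshot =
            new AtomicReference<>(Map.of());

    // Called by the (hypothetical) push transport with the complete, current configuration.
    // Map.copyOf makes an immutable copy and rejects null keys or values.
    void onUpdate(Map<String, String> fullConfiguration) {
        snapshot.set(Map.copyOf(fullConfiguration));
    }

    // Returns one consistent view of all toggles.
    Map<String, String> current() {
        return snapshot.get();
    }

    boolean isEnabled(String toggle) {
        return Boolean.parseBoolean(snapshot.get().getOrDefault(toggle, "false"));
    }
}
```

Because `current()` returns the whole snapshot, a request that needs several related values can read it once and use that same map throughout, even if a newer configuration arrives mid-request.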

A central configuration service is a slightly more complicated architecture, but it brings clear benefits beyond the obvious one of having a single system and a consistent way of managing toggles across different applications. Since feature toggles and other dynamic configuration can significantly change the behaviour of a system, beyond a certain scale there is a need to manage and audit changes made to them. A central system can handle such ancillary requirements in one place, instead of every team having to deal with them on its own.
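To illustrate the auditing requirement, here is a minimal sketch of a toggle store that records who changed which toggle, when, and from what value. `ToggleChange` and `AuditedToggleStore` are hypothetical names, and the in-memory list is an assumption; a real central service would persist the log. Records need Java 16+.

```java
import java.time.Instant;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// One audit entry per toggle change.
record ToggleChange(String toggle, String oldValue, String newValue, String changedBy, Instant at) {}

// Toggle store in which every write is recorded in an audit log.
final class AuditedToggleStore {
    private final Map<String, String> values = new HashMap<>();
    private final List<ToggleChange> auditLog = new ArrayList<>();

    synchronized void set(String toggle, String newValue, String changedBy) {
        String oldValue = values.put(toggle, newValue); // null if the toggle is new
        auditLog.add(new ToggleChange(toggle, oldValue, newValue, changedBy, Instant.now()));
    }

    synchronized String get(String toggle) {
        return values.get(toggle);
    }

    // Read-only copy of the full change history, oldest first.
    synchronized List<ToggleChange> history() {
        return List.copyOf(auditLog);
    }
}
```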

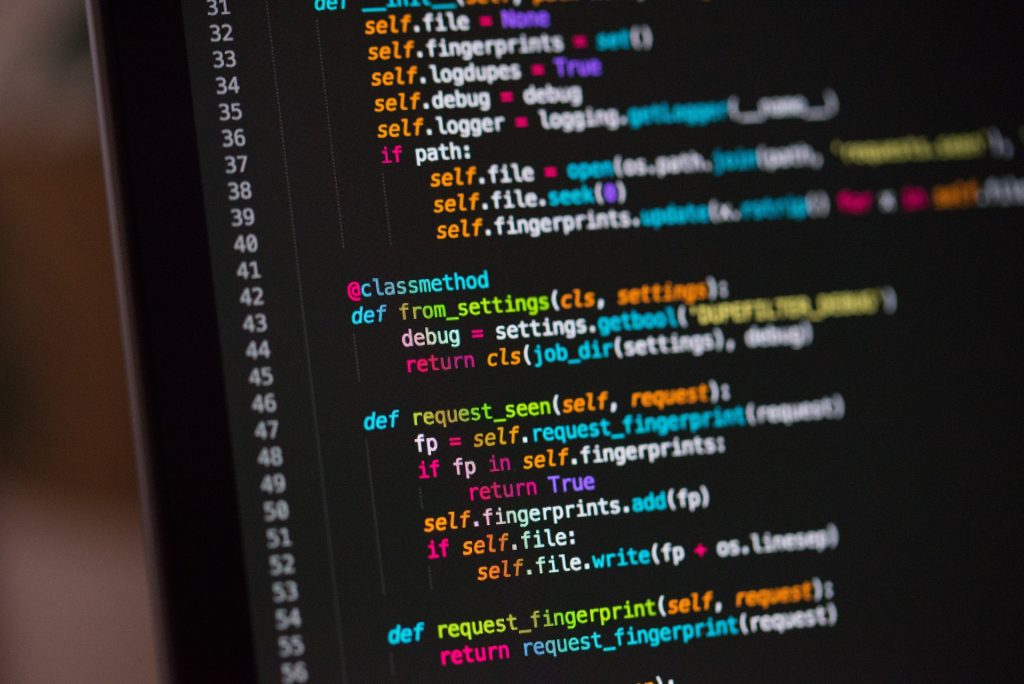

Coding Time

So let’s try coding this thing a little bit. We will stick to the earlier example: we have optimized a method in the code and want to control its release in production.

Originally the code might look something like this.

```java
public void mainBusinessLogic() {
    // Some business logic here

    unoptimizedMethod();

    // Some more business logic here
}

private void unoptimizedMethod() {
    // Some shitty code here
}
```

Whatever store we use for dynamic configuration, the first thing to do is to read the configuration and keep it refreshed at runtime. A simple thread-based mechanism can periodically pull the feature toggle configuration from our data store.

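```java
import java.time.Duration;
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.logging.Level;
import java.util.logging.Logger;

public abstract class ConfigClient {

    private static final Logger log = Logger.getLogger(ConfigClient.class.getName());

    private static final long REFRESH_INTERVAL_MS = Duration.ofMinutes(5).toMillis();

    // Written by the refresher thread, read by request threads: volatile makes each new
    // snapshot visible, and the map is replaced as a whole rather than mutated in place.
    private volatile Map<String, String> configurationCache = Collections.emptyMap();

    // Daemon thread so the refresher does not keep the JVM alive on shutdown.
    private final ScheduledExecutorService executor = Executors.newSingleThreadScheduledExecutor(runnable -> {
        Thread thread = new Thread(runnable, "configuration-refresher");
        thread.setDaemon(true);
        return thread;
    });

    // Call once after construction. Loading is not done in the constructor because
    // getProperties() is overridden by a subclass whose fields are not yet initialized there.
    public void start() {
        // Load the properties one time so that we can fail app startup on any problem.
        loadProperties();

        // Then reload periodically, starting one interval from now.
        executor.scheduleAtFixedRate(new ConfigurationRefresherThread(),
                REFRESH_INTERVAL_MS, REFRESH_INTERVAL_MS, TimeUnit.MILLISECONDS);
    }

    public Optional<String> getConfigValue(String property) {
        return Optional.ofNullable(configurationCache.get(property));
    }

    private void loadProperties() {
        Map<String, String> propertyMap = getProperties();
        // Keep the last known values if the store returns nothing.
        if (propertyMap != null && !propertyMap.isEmpty()) {
            configurationCache = Collections.unmodifiableMap(new HashMap<>(propertyMap));
        }
    }

    // Override this method to read from where the configurations are stored.
    protected abstract Map<String, String> getProperties();

    /**
     * Refreshes the property cache. Despite its name, this is a Runnable
     * scheduled on the executor above, not a Thread subclass.
     */
    private class ConfigurationRefresherThread implements Runnable {
        @Override
        public void run() {
            log.fine("Refreshing configurations...");
            try {
                loadProperties();
            } catch (RuntimeException e) {
                // An exception escaping run() would cancel every future refresh,
                // so log it and keep serving the last known values.
                log.log(Level.WARNING, "Failed to refresh configurations", e);
            }
        }
    }
}
```

This class uses a single scheduled thread to run ConfigurationRefresherThread every 5 minutes. Each run loads the data from the configuration store (in the loadProperties method) and swaps it into an in-memory map; a failed refresh is logged and the last known values are kept. The actual mechanism for reading the configuration store can be plugged in by extending this class and implementing the getProperties method, after which the application calls start() once during startup.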

Now we have the feature toggles in memory. Using one is a simple if-else in the main business logic.

```java
// configClient: a started instance of a ConfigClient subclass, e.g. injected into this class.
public void mainBusinessLogic() {
    // Some business logic here

    if (Boolean.parseBoolean(configClient.getConfigValue("enable-optimized-method").orElse("false"))) {
        optimizedMethod();
    } else {
        unoptimizedMethod();
    }

    // Some more business logic here
}

private void unoptimizedMethod() {
    // Some shitty code here
}

private void optimizedMethod() {
    // Some good code here
}
```

To engage the optimized code, we just set the value of the “enable-optimized-method” property in the configuration store to “true”. If something goes wrong, we switch it back to “false” (a missing value, or anything other than “true”, also counts as off). Either change takes effect within one refresh interval, i.e. in no more than about 5 minutes.

The problems

While I cannot recommend Feature Toggles strongly enough, there are certain drawbacks that you need to be aware of when you use them.

Backward compatibility

Since the idea of feature toggles is to, well, toggle between old and new code, you cannot simply delete the old code and write new code, or modify the behaviour in place. All changes have to be backward compatible, so that if the new implementation has any problems, we can revert to the old one.
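One way to keep both implementations available, sketched under the assumption that the old and new code can share an interface: the toggle only chooses which implementation handles each call, and neither is modified in place. `SumOfFirstN` and its implementations are hypothetical stand-ins for the unoptimized and optimized methods; the toggle is read through a `BooleanSupplier`, so it could be backed by something like `configClient.getConfigValue(...)` or any other source.

```java
import java.util.function.BooleanSupplier;

// Shared contract so old and new implementations are interchangeable (assumes n >= 0).
interface SumOfFirstN {
    long sum(int n);
}

// Existing behaviour, left untouched so we can fall back to it.
final class LoopSum implements SumOfFirstN {
    public long sum(int n) {
        long total = 0;
        for (int i = 1; i <= n; i++) {
            total += i;
        }
        return total;
    }
}

// New, optimized behaviour added alongside the old one.
final class FormulaSum implements SumOfFirstN {
    public long sum(int n) {
        return (long) n * (n + 1) / 2;
    }
}

// Routes each call according to the toggle's current value.
final class ToggledSum implements SumOfFirstN {
    private final BooleanSupplier useNewPath;
    private final SumOfFirstN oldImpl;
    private final SumOfFirstN newImpl;

    ToggledSum(BooleanSupplier useNewPath, SumOfFirstN oldImpl, SumOfFirstN newImpl) {
        this.useNewPath = useNewPath;
        this.oldImpl = oldImpl;
        this.newImpl = newImpl;
    }

    public long sum(int n) {
        return (useNewPath.getAsBoolean() ? newImpl : oldImpl).sum(n);
    }
}
```

When the toggle is finally removed, cleanup is just deleting `LoopSum` and `ToggledSum` and wiring `FormulaSum` in directly.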

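Writing every change in this backward-compatible way is ideal in principle, but in practice it is far simpler to just change the code in place. Expect to deal with some tricky situations during low-level design, for example when the new code path needs data in a different shape from the old one and both have to keep working until the toggle is removed.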

Two failure modes

Similar to the previous point, since code behind a feature toggle has two modes of running, it also has two modes of failure. Both the toggle-on and toggle-off code paths have to be tested if we actually expect to be able to switch between them.
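A minimal sketch of exercising both paths: the same expectations run once with the toggle off and once with it on. It is plain Java rather than a test framework (with JUnit 5 the outer loop would typically become a parameterized test), and `oldPath`/`newPath` are hypothetical stand-ins for the two code paths.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.IntUnaryOperator;

final class BothPathsCheck {
    // Hypothetical toggle-off implementation (assumes non-negative input).
    static int oldPath(int x) {
        int result = 0;
        for (int i = 0; i < x; i++) {
            result += 2;
        }
        return result;
    }

    // Hypothetical toggle-on implementation.
    static int newPath(int x) {
        return 2 * x;
    }

    // Selects the implementation the way production code would for a given toggle value.
    static IntUnaryOperator pathFor(boolean toggleOn) {
        return toggleOn ? BothPathsCheck::newPath : BothPathsCheck::oldPath;
    }

    // Runs the same expectations against both toggle states and collects any failures.
    static List<String> verify(int[] inputs, IntUnaryOperator expected) {
        List<String> failures = new ArrayList<>();
        for (boolean toggleOn : new boolean[] {false, true}) {
            IntUnaryOperator path = pathFor(toggleOn);
            for (int input : inputs) {
                int actual = path.applyAsInt(input);
                int want = expected.applyAsInt(input);
                if (actual != want) {
                    failures.add("toggle=" + toggleOn + " input=" + input
                            + " got " + actual + " want " + want);
                }
            }
        }
        return failures;
    }
}
```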

Cleaning up the toggles

If we use feature toggles as a general practice, toggles can start accumulating in the code and things can start looking messy. So once a change has stabilized, it is important to go back to the code and remove the toggle we used for it.

This is, in a sense, the antidote to the two previous problems. Ideally we should not let code stay in toggle mode for long. Removing toggles promptly significantly reduces code complexity, testing effort, and the cognitive overhead of understanding that code.
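One lightweight way to keep toggles from piling up, sketched here as an assumption rather than a feature of any particular tool: register every toggle with an owner and a removal date, and have a check (in CI or at startup) list the ones that are overdue. `ToggleDefinition` and `ToggleRegistry` are hypothetical names; records need Java 16+.

```java
import java.time.LocalDate;
import java.util.ArrayList;
import java.util.List;

// Metadata recorded when a toggle is introduced.
record ToggleDefinition(String name, String owner, LocalDate removeBy) {}

final class ToggleRegistry {
    private final List<ToggleDefinition> toggles = new ArrayList<>();

    void register(ToggleDefinition definition) {
        toggles.add(definition);
    }

    // Toggles whose removal date has passed as of 'today'; these are due for cleanup.
    List<ToggleDefinition> overdue(LocalDate today) {
        List<ToggleDefinition> result = new ArrayList<>();
        for (ToggleDefinition toggle : toggles) {
            if (today.isAfter(toggle.removeBy())) {
                result.add(toggle);
            }
        }
        return result;
    }
}
```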

A shortcut to proper modelling

Feature toggles are meant to be short-term switches between code paths. If you see toggles expressing richer system semantics than just on/off (e.g. toggle values such as pending/created/in process/complete), it means that somewhere our domain modelling is weak and we are trying to plug the gap with ad-hoc configuration values. We should keep an eye out for such scenarios and revisit our system model when we come across them.
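To make the contrast concrete, here is a hypothetical order workflow: rather than reading a configuration value such as "pending" or "complete" to decide what the code does, the states belong in the domain model, for example as an enum with explicit transitions. The states and transitions are illustrative only; the switch expression needs Java 14+.

```java
import java.util.EnumSet;
import java.util.Set;

// Order lifecycle modelled explicitly instead of through a multi-valued toggle.
enum OrderStatus {
    PENDING, CREATED, IN_PROCESS, COMPLETE;

    // Allowed next states, enforced by the domain rather than by runtime configuration.
    Set<OrderStatus> next() {
        return switch (this) {
            case PENDING -> EnumSet.of(CREATED);
            case CREATED -> EnumSet.of(IN_PROCESS);
            case IN_PROCESS -> EnumSet.of(COMPLETE);
            case COMPLETE -> EnumSet.noneOf(OrderStatus.class);
        };
    }

    boolean canMoveTo(OrderStatus target) {
        return next().contains(target);
    }
}
```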

If you liked this, subscribe to my weekly newsletter, It Depends, to read about software engineering and technical leadership.

Very insightful. I have a question about multi-value configuration that needs to be read as a whole for the application to work seamlessly. What I mean is, let’s say I have an app which uses libraries or sub-components A and B. Both have configuration that changes based on a feature toggle. Now if I load the new configuration at runtime, I need to ensure that both libs/sub-components reload the new config in its entirety. I also need to make sure that these components behave reliably and predictably before and after loading the new configuration. This adds complexity if my configuration is large and my components are multithreaded, doesn’t it? What would simplify this?