- Usability

- Scalability

- Maintainability

- Extensibility

- Reliability

- Security

- Portability

- …

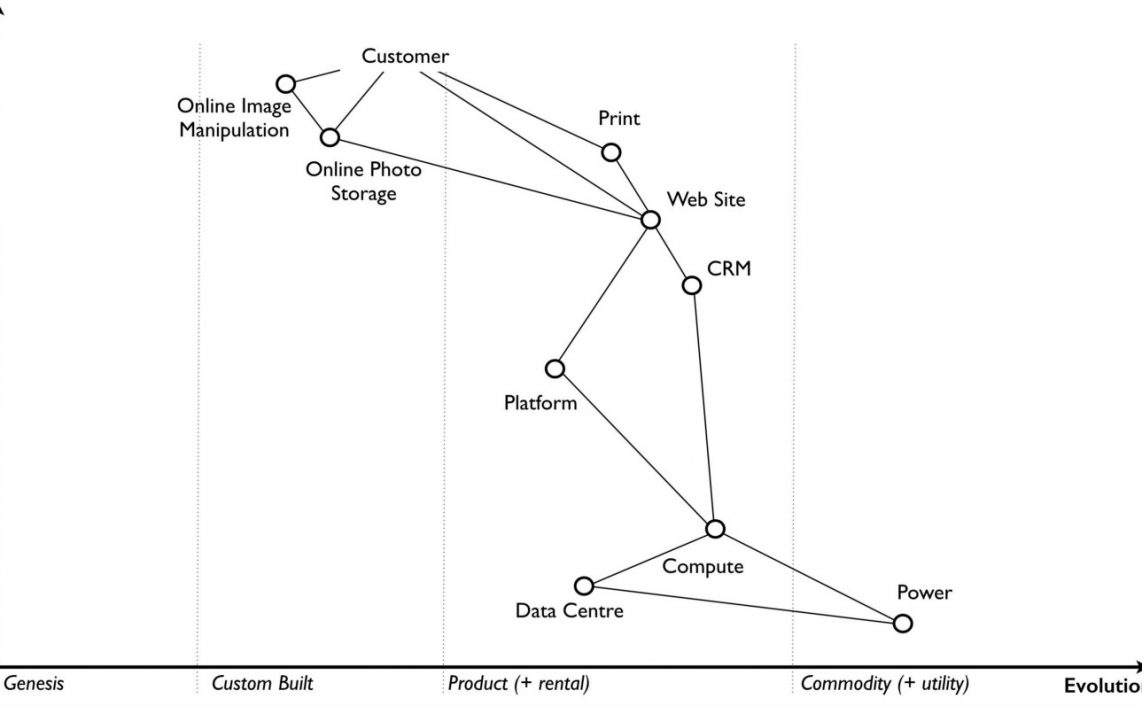

It is often said that software architects deal in “ilities”. There are many “ilities”, which together describe the attributes good software design should have, a sort of multi-dimensional matrix for evaluating software quality. As is to be expected with all things software, not all of these dimensions are equally important in all scenarios, and architects are often required to make tradeoffs between two or more of them to solve the problem at hand. For example, one might sacrifice usability for security, or portability for scalability. Which one is better “depends” on whether you are building banking software (security) or an API gateway (scalability).

Ilities are a great way of analyzing specific components deeply. A single component (or a set of components serving a single purpose) is likely to have very well defined requirements, and we can express these in terms of specific software attributes like maintainability, security, etc. This is useful in driving focused conversations around how the component should be built and evolved.

However, when we design a large system made up of many components, each of which may have different requirements but is still expected to work with the others (e.g. a large scale microservice architecture), it is difficult to extrapolate from specific technical dimensions to overall rules that should always be upheld across the org. At such a macro level, we need to define architecture in terms of what the business values the most, and ilities give us no good way of doing this. They are a good way of examining specific systems at a very technical level, but they offer little guidance on how to build large scale system architectures or on setting down broad architectural principles.

So ilities should be used at the appropriate level of abstraction. They are useful when analyzing a single component or a small, coherent group of components. As long as the components under the scanner have a single purpose, typically we can pick out specific capabilities that best fit the requirements of the component.

As should be clear by now, I find ilities kind of boring – useful but often too straight-laced. I feel that defining high level architecture in these terms cuts the analysis into overly narrow and technical slices and does not take into account the way business value is delivered.

Over time, I have started evaluating software design in a slightly orthogonal way. I have picked out two system characteristics that I “personally” value the most, and these are the rules that I most often run any software design by. I think these two together strike the right balance between delivering value and technical competence in a broad enough way that they can be applied to any system scale. And like all good ilities, they are open to case-by-case interpretation and tradeoff.

Increasingly reduced time to market

Pragmatic Dave Thomas says – “When faced with two or more alternatives that deliver roughly the same value, take the path that makes future change easier” (Check out his talk(s) and blog – extremely funny and insightful).

No matter what beautiful things we might do as software architects, business wants things in production as soon as possible so that money can be made. Eventually, that’s all that matters to the organization. “Move fast and break things” is rightly one of the most famous slogans in startups – deliver as fast as you can, even at some risk of mistakes. This is the part where we focus on the business.

So I think about whether the current architecture will make it easier or harder to ship things in the future. There are specific dimensions along which this can be analyzed:

- What decisions in this architecture are hard to reverse? Identify and minimize them.

- Is this feature building towards a platform or is it a one-off? If the latter, can it be split into platform and product so that we can later build on top of it? (Build momentum, not velocity.)

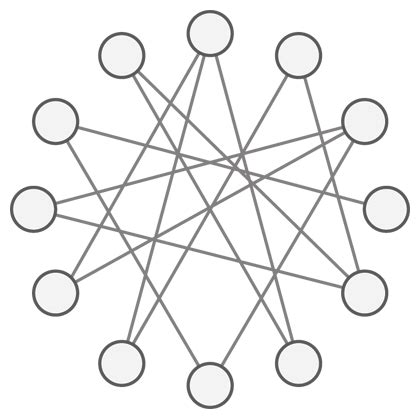

- Does this increase communication between teams? More comms -> more execution alignment required -> slower delivery speed. Would this require some sort of transfer of responsibility across teams, or a reorg? This aspect is always a little political/sensitive in nature.

- Does the design have good abstractions behind which we can change things, or are we leaking details that lead to coupling? The latter obviously makes future change harder.
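To make the last point concrete, here is a minimal sketch of an abstraction that keeps future change cheap. All names (`PaymentGateway`, `CardGateway`, `WalletGateway`, `checkout`) are hypothetical and chosen only for illustration; the idea is simply that the caller knows about the interface and nothing about the provider behind it.

```python
from dataclasses import dataclass
from typing import Protocol


class PaymentGateway(Protocol):
    """Hypothetical abstraction: callers only know that money can be charged."""

    def charge(self, customer_id: str, amount_cents: int) -> str:
        """Charge the customer and return a receipt id."""
        ...


@dataclass
class CardGateway:
    """One hypothetical implementation; its details stay behind the interface."""
    merchant_id: str

    def charge(self, customer_id: str, amount_cents: int) -> str:
        return f"card:{self.merchant_id}:{customer_id}:{amount_cents}"


@dataclass
class WalletGateway:
    """A later replacement; callers do not need to change to use it."""
    wallet_region: str

    def charge(self, customer_id: str, amount_cents: int) -> str:
        return f"wallet:{self.wallet_region}:{customer_id}:{amount_cents}"


def checkout(gateway: PaymentGateway, customer_id: str, amount_cents: int) -> str:
    # The caller depends only on the abstraction, not on any provider detail.
    return gateway.charge(customer_id, amount_cents)
```

Swapping `CardGateway` for `WalletGateway` touches no calling code. If `checkout` instead reached for `merchant_id` directly, that leaked detail would have to be unpicked from every caller before the provider could change.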

Resilience

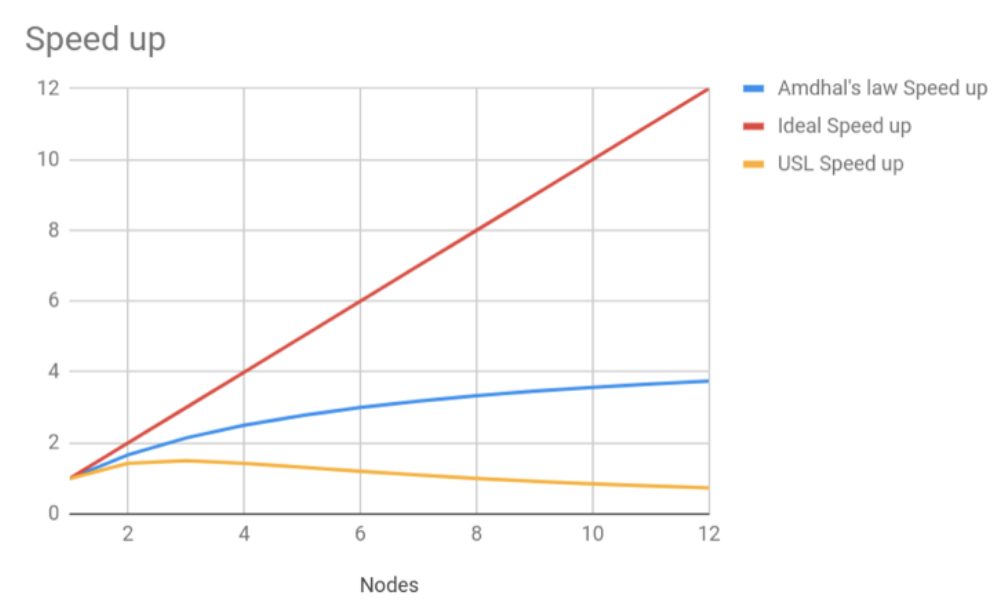

I cheated a little bit here – Resilience is often considered one of the ilities. I like “Move fast and break things”. “Move fast and break everything” – not so much. So while we give a lot of thought to delivering rapid changes to the system, we also need to think very carefully about how we contain the problems that will inevitably be introduced as we get faster and faster.

There are two things to look out for here. One is to ensure that all failures are contained, and the other is that failures are detected quickly.

Failure Containment

- Can this system take down adjacent systems if load on it spikes?

- Can adjacent systems take this system down if they get a load spike?

- How will adjacent systems function if this system goes down and vice-versa? Are there fallbacks and ways to recover?

- What will customers not be able to do during the outage?
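One common way to answer the load-spike questions above is a bulkhead: cap how many calls may be in flight to a dependency and reject the rest right away. The sketch below is only an illustration under that assumption; the `Bulkhead` class and its parameters are hypothetical names, not something prescribed here.

```python
import threading
from typing import Callable, TypeVar

T = TypeVar("T")


class Bulkhead:
    """Caps concurrent calls into a dependency so a spike is shed, not propagated."""

    def __init__(self, max_concurrent: int) -> None:
        self._slots = threading.BoundedSemaphore(max_concurrent)

    def run(self, operation: Callable[[], T], on_rejected: Callable[[], T]) -> T:
        # Non-blocking acquire: when all slots are busy, reject immediately
        # instead of queueing work that would pile up on the caller.
        if not self._slots.acquire(blocking=False):
            return on_rejected()
        try:
            return operation()
        finally:
            self._slots.release()
```

Rejecting early keeps the overloaded system from dragging its callers down with it, at the cost of some requests being refused during the spike.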

As you can see, all of this is familiar technical territory for software builders.

Another thing to note is that I’m not so concerned about “why” a system would fail, just with what would happen when it fails. Because no matter what we do, a system will fail at some point – bad code, infrastructure issues, unexpected load, neglect over time – the list of potential causes is endless and pretty much none of them is completely avoidable. So at a high level, I like to focus on defining what happens when things fail rather than explicitly preventing failure itself.
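Defining what happens on failure, rather than why it happened, is roughly what a circuit breaker with a fallback does. The following is a minimal sketch, not a production implementation; `CircuitBreaker`, `max_failures`, `reset_after_s` and the injected `clock` are all hypothetical names introduced for illustration.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Stops calling a failing dependency for a while and serves a fallback instead."""

    def __init__(self, max_failures: int = 3, reset_after_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def is_open(self) -> bool:
        if self.opened_at is None:
            return False
        if self.clock() - self.opened_at >= self.reset_after_s:
            # Cool-down elapsed: allow one trial call (half-open state).
            # A single further failure will open the breaker again.
            self.opened_at = None
            self.failures = self.max_failures - 1
            return False
        return True

    def call(self, operation: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.is_open():
            return fallback()  # do not touch the failing dependency at all
        try:
            result = operation()
        except Exception:
            # The cause does not matter here, only the response to it.
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback()
        self.failures = 0
        return result
```

The breaker never inspects the exception: whatever the reason, the caller gets a defined fallback (a cached value, a degraded response) and the struggling dependency gets time to recover.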

Failure Detection

The second step is building observability into the systems to quickly detect and root cause the failures that we did not anticipate. Microservice architectures especially are complex systems, and the whole is more than the sum of its parts. Charity Majors has written much about observability that I can never hope to match, so I will just point you towards her for the specifics, but the basic idea is to have sufficient instrumentation in the system such that we know very quickly what has broken and where/why.
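As a rough illustration of what “sufficient instrumentation” can mean at the smallest scale, the sketch below emits one structured event per unit of work, with its outcome and duration. It uses only the standard library; the `instrumented` helper and the in-memory `EVENTS` list are hypothetical stand-ins for whatever telemetry backend is actually in use.

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterator, List

EVENTS: List[Dict[str, object]] = []  # stand-in for a real telemetry backend


@contextmanager
def instrumented(service: str, operation: str) -> Iterator[Dict[str, object]]:
    """Record one structured event per unit of work, whether it succeeds or fails."""
    event: Dict[str, object] = {"service": service, "operation": operation}
    started = time.perf_counter()
    try:
        yield event  # callers may attach extra context, e.g. event["order_id"] = ...
        event["status"] = "ok"
    except Exception as exc:
        event["status"] = "error"
        event["error"] = f"{type(exc).__name__}: {exc}"
        raise  # detection must not swallow the failure
    finally:
        event["duration_ms"] = (time.perf_counter() - started) * 1000.0
        EVENTS.append(event)
```

Wrapping a request handler in `with instrumented("orders", "create") as event:` gives every call a record of what ran, where, how long it took and how it ended, which is the raw material for answering “what has broken and where/why”.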

I’d love to hear what you think of this broader architecture evaluation rubric (at least for large scale architectures) as compared to specifically using “ilities”. Please leave your comments below and as always, if you like what you read, subscribe to the mailing list for more good stuff.