Distributed system design is a hard problem, made all the worse because the design process gives no direct feedback. Problems stemming from faulty design often show up as scalability problems, resilience problems or data issues. However, solving those problems often amounts to addressing the symptoms and not the disease: we may be able to patch up the system enough to keep it running, but the underlying design issues remain and can be triggered again under different circumstances. It takes a lot of effort, not to mention organizational wrangling, to analyze design-related root causes while the system is failing in production.

As with an earlier post on code reviews for distributed systems, this article is a simple checklist of things that I look out for when reviewing the design of any distributed functionality (anything which requires multiple systems to work together).

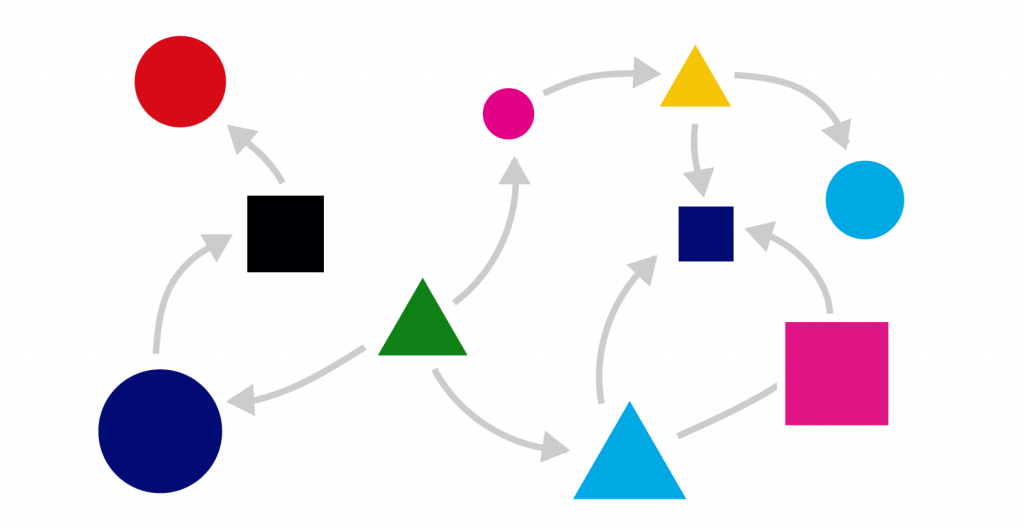

I think of distributed design issues in terms of three buckets : Consistency-vs-Availability, Domain Coupling, and Observability. The first two often leak into each other because a distributed system is like a complex mesh and each design choice impacts multiple other things. Each bucket is a huge topic in its own right, so the following guidelines represent a minimum level of scrutiny that I believe should be applied to any design.

Depending on the use-case and context of the problem, you should go far deeper into specific aspects after these basics have been checked off. Conversely, if there’s a problem with these, be extremely cautious.

Consistency or Availability

I should remind you upfront that when I say “system” in this article, I mean a collection of independent systems which collaborate in different ways to deliver the final user experience. And when we talk about consistency versus availability, we are talking about all the involved systems.

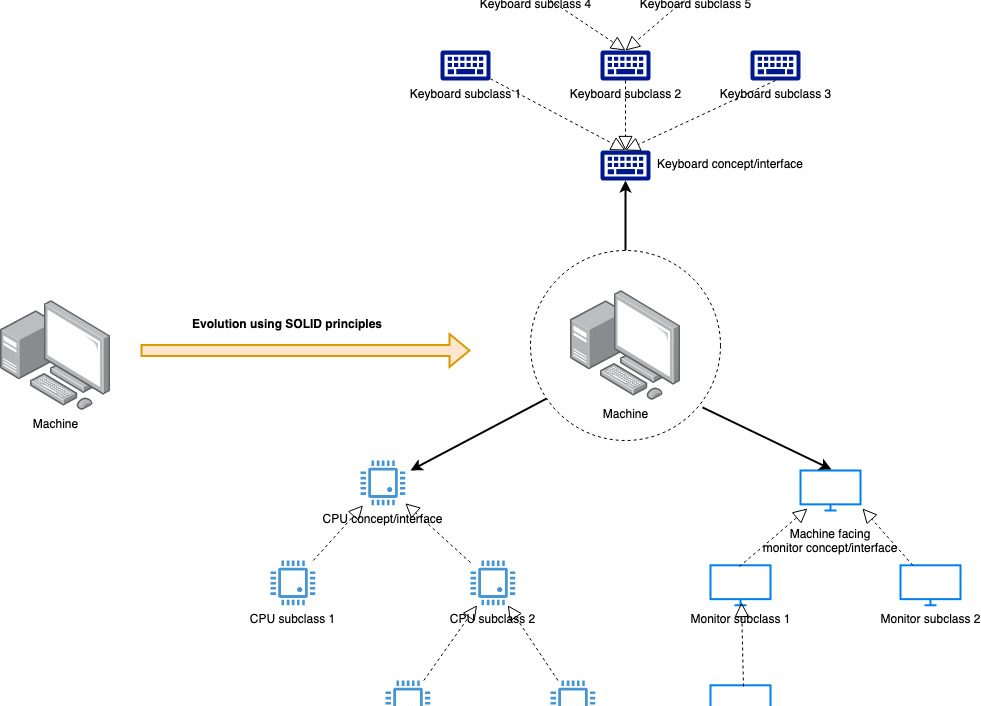

The CAP theorem is popularly summarised as letting us choose any two out of consistency, availability, and partition tolerance for our system. However, network partitions are a fact of life, hence the true choice in the CAP theorem is between consistency and availability: we can have an “AP” system (available, i.e. it continues to work under partition) or a “CP” system (consistent, i.e. it fails unless all involved components are alive and reachable).

A fundamental rule of software architecture is that all software fails. So let’s say that we need consistency across three components for a feature to work as designed. This makes the feature brittle because if any one of the components is down, the feature does not work. We now have the dreaded “Single Point of Failure”, and in fact we have three of them! The more components we try to keep consistent with each other, the more susceptible the entire system becomes to failure at the slightest glitch.
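To put a rough number on this, here is a small sketch of how availability compounds when a feature needs every component to be up at the same moment. The 99.9% figures are made up for illustration, and the calculation assumes component failures are independent, which real systems only approximate.

```python
from math import prod

def combined_availability(availabilities):
    """Availability of a feature that needs every component up at once.

    Assumes failures are independent, so the probabilities multiply.
    """
    return prod(availabilities)

# Three components, each up 99.9% of the time (illustrative numbers).
three_components = [0.999, 0.999, 0.999]
print(f"{combined_availability(three_components):.4%}")  # 99.7003%
```

Under these assumptions, three "three nines" components that must all agree give the feature roughly three times the downtime of any single one of them.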

Fortunately, there is a way out.

When looking at individual systems, CP and AP can look like binary choices (e.g. a single MySQL instance favours consistency, while Cassandra by default favours availability), but when looking at a distributed system, they start taking on shades of grey. Each component may be consistent (order, inventory and payment), but the overall system can still be designed to be eventually consistent. This gives us the necessary leeway to add some availability to our system. So the main guideline here is to design the OVERALL system for availability, such that the complete functionality can be achieved over time despite individual subsystems being intermittently unavailable.

Use asynchronous message passing for communication

This is the most powerful weapon we have for relieving the pressure of consistency in favour of availability, and it features as a major guideline in the Reactive Manifesto. Consider making the communication between components asynchronous (message passing over a message broker) instead of a request-response style API call. If synchronous communication is the crack cocaine of Silicon Valley, synchronous API calls are the crack cocaine of distributed system design. Consider this: if two systems don’t have to be consistent, then why should we do anything immediately (as the synchronous communication model demands)? A request-response model creates a form of temporal coupling (“serve this request right now!”) between the caller and callee, which causes the former to fail if the latter is unavailable at that moment, which can then cause cascading failures in its own callers and so on. Asynchronous communication allows the called system to process requests at its own pace, so it no longer has to be available at the exact moment the caller needs it.
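A minimal sketch of the difference, using Python's in-memory `queue.Queue` as a stand-in for a real, durable message broker. The function names and the `ORDER_PLACED` message type are hypothetical and only serve the illustration.

```python
import queue

# In-memory stand-in for a message broker (assumption: a real system
# would use a durable broker that survives restarts).
broker = queue.Queue()

def place_order(order_id):
    """Caller side: record what happened and return immediately."""
    broker.put({"type": "ORDER_PLACED", "order_id": order_id})
    return "accepted"

def drain_notifications(sent):
    """Callee side: process whatever has accumulated, at its own pace."""
    while True:
        try:
            message = broker.get_nowait()
        except queue.Empty:
            break
        sent.append(f"notified for order {message['order_id']}")

# The notification component is "down" while these orders arrive,
# yet the callers still succeed.
results = [place_order(1), place_order(2)]

# Once the component recovers, it catches up on the backlog.
sent = []
drain_notifications(sent)
print(results, sent)
```

With a request-response call in place of `broker.put`, both `place_order` calls would have failed for as long as the notification component was unavailable.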

While asynchronous messaging is a powerful tool, there are several things that MUST be kept in mind when adopting it.

- Define the minimal acceptable user experience : For every end-user experience, define the absolute minimal consistent experience. E.g. if a user wins an online game, must we credit bonus points, award them a new position on the leaderboard, notify all their friends, and send them a notification in an all-or-nothing manner? The more we can agree to do outside the core, consistent experience, the less likely it is that we will encounter system failure. We must be ruthless about this when discussing requirements and do synchronously only the minimum needed to support that core experience; everything else should be done asynchronously.

- Explicitly guarantee eventual consistency : A single user action or client request can modify data across multiple components, and the design should guarantee that all these systems will come into agreement within some specified time, even if it is via a distributed rollback.

- Guarantee SLA for consistency systemically : This point is worth calling out all by itself. We may have a plan for eventual consistency, but without a mechanism for setting and enforcing a time frame on it, it is impossible to distinguish failure from slow processing. Since we cannot determine whether an event threw an error during processing or whether it got dropped in the network, an explicit hard bound in time is necessary to maintain the eventual consistency guarantee.
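One way to picture a systemic consistency SLA is a tracker that records a deadline for every asynchronous side effect and reports the ones that were never confirmed in time. This is only a sketch: `ConsistencyTracker`, its method names and the 30-second bound are hypothetical, and the current time is passed in explicitly to keep the example deterministic.

```python
from dataclasses import dataclass, field

@dataclass
class ConsistencyTracker:
    """Tracks side effects that must be confirmed within sla_seconds."""
    sla_seconds: float
    pending: dict = field(default_factory=dict)  # action_id -> deadline

    def started(self, action_id, now):
        self.pending[action_id] = now + self.sla_seconds

    def confirmed(self, action_id):
        self.pending.pop(action_id, None)

    def overdue(self, now):
        """Actions past their deadline: treat as failed, then retry or roll back."""
        return sorted(a for a, deadline in self.pending.items() if now > deadline)

tracker = ConsistencyTracker(sla_seconds=30)
tracker.started("credit-bonus-points", now=0)
tracker.started("update-leaderboard", now=0)
tracker.confirmed("credit-bonus-points")

print(tracker.overdue(now=10))  # []
print(tracker.overdue(now=31))  # ['update-leaderboard']
```

The tracker never needs to know why the leaderboard update is missing (an error, a dropped message, a slow consumer). Crossing the time bound is what turns "maybe slow" into "failed".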

Domain Coupling

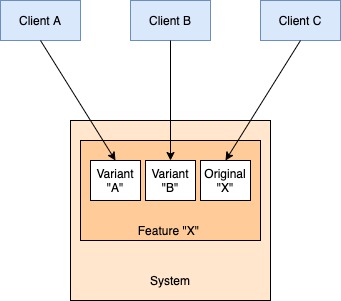

Good distributed system design essentially hinges on separating distinct things from each other at the right level of abstraction. This line of separation is called the domain boundary and is identified by a unique language of communication and interfaces for functionality unique to that domain. E.g. A message broker domain encapsulates messages, delivery guarantees, storage media etc. Payments domain encapsulates transactions, payment gateways etc.

While this broadly maps to the concept of bounded-context in microservice language, the idea is applicable broadly and recursively. E.g. Payments can be a domain, inside which payment gateways and transactions can be sub-domains, and so on.

The main idea of designing a distributed system in terms of domains is to decouple domains at the same level, while building cohesion at the level of their parent domain (if any). E.g. in the example above, we would try to keep payment gateways and transactions as decoupled from each other as possible in terms of implementation, but being part of the same domain should dictate a certain consistency in terminology and data models.

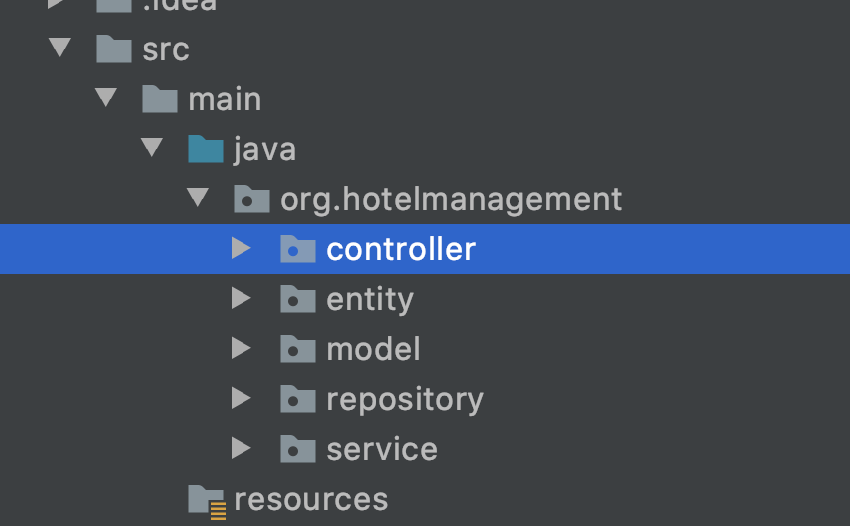

- Create domain boundaries : With the huge uptick in adoption of microservices, it has become very important to identify the domain of each component in a manner decoupled from the underlying technical implementation. It is important to identify which components belong to which domain and how external systems talk to these systems. We might use multiple services to handle a shipment (tracking service, scanning service, audit service etc), but external users should work with a cohesive “logistics” domain entity and API. A very good tool for building domain boundaries is an API gateway, which can abstract the internal details of a domain behind higher order APIs.

- Use standard domain language to communicate between systems : Chatter between two components should be in terms of existing entities + their states + actions possible on them instead of some newly created constructs which belong to neither domain. We can use constructs from either side of the communication for this, depending on whether we want events or messages. If you find that communicating between two components requires creation of some special language, it “might” mean that there is some problem in the way these two components are separated or perhaps we are missing another component that should exist between these two.
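As a rough sketch of such a boundary, the facade below plays the role an API gateway would play for the logistics example. All class names, method names and return values are hypothetical, and the services return canned data so the example stays self-contained.

```python
class TrackingService:
    """Internal service; callers outside logistics never see it."""
    def location(self, shipment_id):
        return "Bengaluru hub"  # canned data for the sketch

class ScanningService:
    """Internal service; callers outside logistics never see it."""
    def last_scan(self, shipment_id):
        return "2024-01-01T10:00:00Z"  # canned data for the sketch

class LogisticsApi:
    """The only surface that systems outside the logistics domain use."""

    def __init__(self, tracking, scanning):
        self._tracking = tracking
        self._scanning = scanning

    def shipment_status(self, shipment_id):
        # Speaks in domain terms (a shipment and its state), not in terms
        # of which internal service happens to hold which field.
        return {
            "shipment_id": shipment_id,
            "location": self._tracking.location(shipment_id),
            "last_scanned_at": self._scanning.last_scan(shipment_id),
        }

logistics = LogisticsApi(TrackingService(), ScanningService())
status = logistics.shipment_status("SHP-1")
print(status)
```

Because callers only know about a shipment and its status, the tracking and scanning services can be split, merged or replaced without any change outside the domain.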

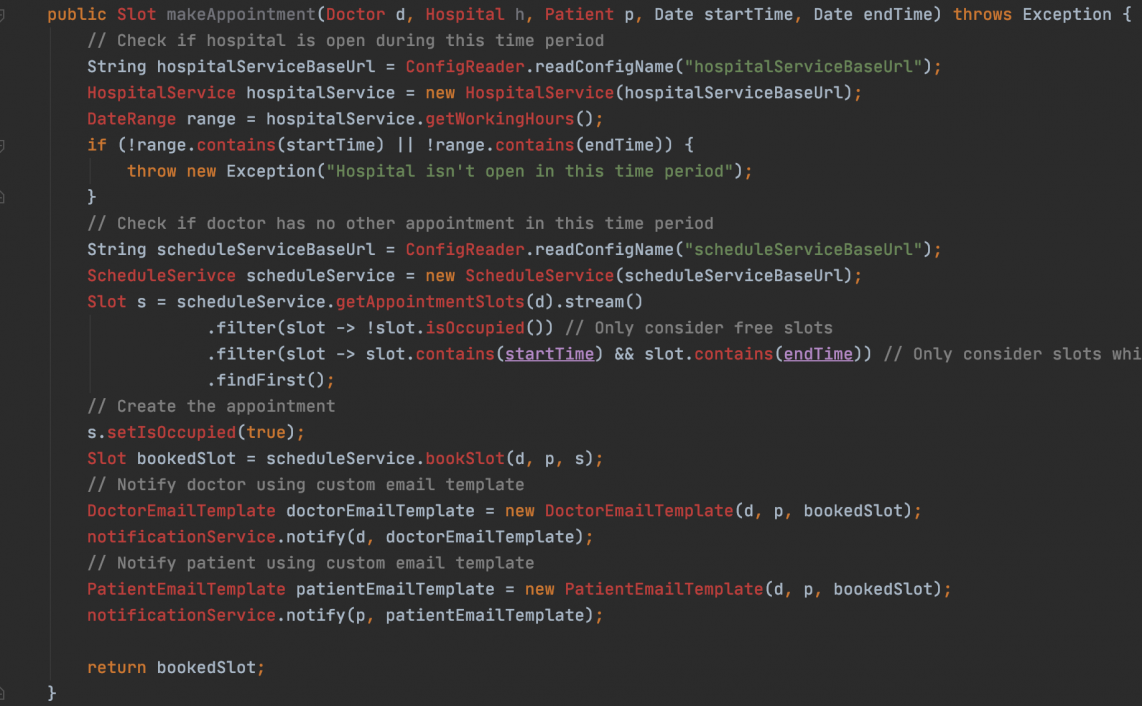

- Separate multi-domain/multi-component actions into workflows – A very common way domain coupling occurs is when one component starts taking end-to-end control of a multi-component workflow. This means that this one domain now knows about various other domains, their behaviour, and the nature of a “workflow” outside of its own boundary. This awareness makes the component coupled to the existing workflows and hence difficult to evolve.

- If our features invoke multiple components, we should separate this orchestration out of the core services modelling the domain into stateful orchestration components. These can be dedicated orchestrating services or some sort of BPM system. Statefulness means that we get benefits like retries, error reports and SLA tracking from one place.

- Using choreography to build workflows is also a viable option if we cannot use explicit orchestration for everything, but we should use some way of tracking task completion SLA to reduce the brittleness for long-lived workflows.

- Model business processes across components : A corollary to the above point is that we should model business processes end-to-end regardless of technical boundaries. Since we are already decoupling domains by offloading orchestration to workflows, it doesn’t make sense to build workflows within narrow team boundaries. We should make the workflows encompass as much of the business process as we can, because this builds central repositories of business knowledge and provides deep visibility into the state of operations.

- Prefer events over messages : A further step in decoupling domains is to prefer pub-sub style events over targeted messages. While this is contingent on many other factors, it makes a domain more agnostic of other domains because the publisher doesn’t care about consumers in the pub-sub model, hence the publisher domain no longer depends on any consumer domain.
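The pub-sub point can be sketched with a tiny in-process event bus. `EventBus` is a hypothetical stand-in for a real broker, and the `PAYMENT_COMPLETED` event with its fields is invented for the example.

```python
from collections import defaultdict

class EventBus:
    """In-process stand-in for a pub-sub broker."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._subscribers[event_type].append(handler)

    def publish(self, event_type, payload):
        # The publisher never names a consumer; it only states what happened.
        for handler in self._subscribers[event_type]:
            handler(payload)

bus = EventBus()
emails, ledger = [], []

# Two unrelated domains react to the same fact, without the payments
# domain knowing that either of them exists.
bus.subscribe("PAYMENT_COMPLETED", lambda event: emails.append(event["order_id"]))
bus.subscribe("PAYMENT_COMPLETED", lambda event: ledger.append(event["amount"]))

bus.publish("PAYMENT_COMPLETED", {"order_id": "o-1", "amount": 250})
print(emails, ledger)
```

Adding a third consumer is a change in the consumer's domain only. With targeted messages, the payments domain would have to learn about every new recipient.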

Observability

In the words of Charity Majors, Observability is the ability to answer new questions about a system without having to peek inside it. I think of observability in two flavours : technical and business. We should be able to explain the technical state of the system, and we should be able to determine if it is doing what it is supposed to do from a business metrics perspective.

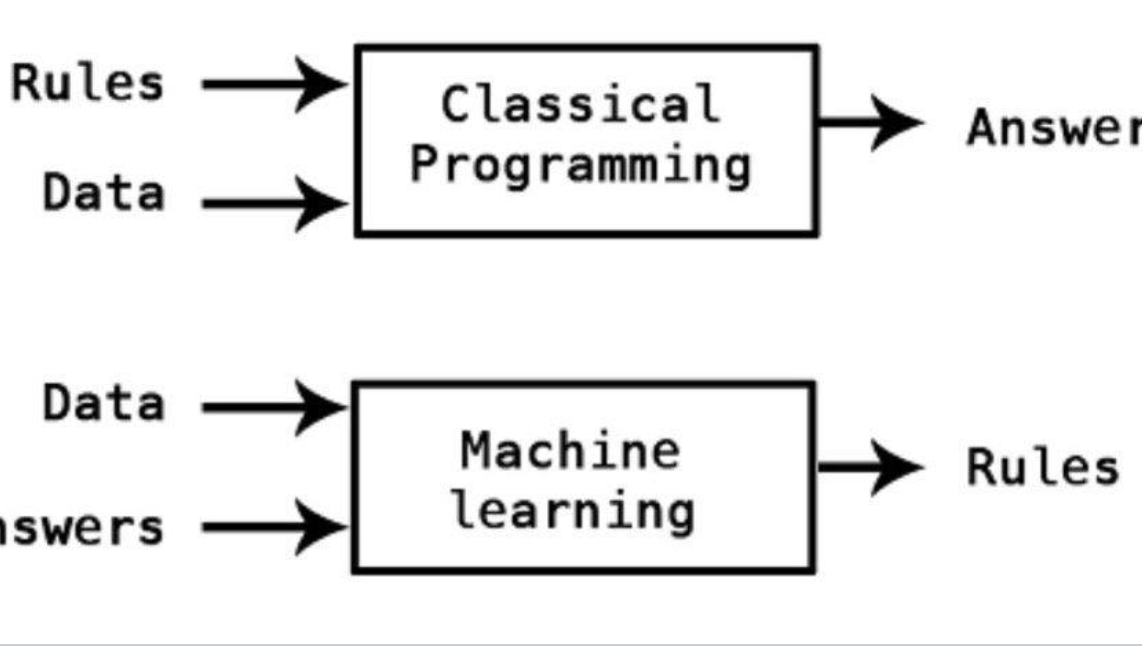

- Use event data to build metrics : The basic unit of work inside any system is an event of some sort, described in the system’s own domain language. The event can be of any type (e.g. ORDER_CREATED, REQUEST_RECEIVED, ERROR_RESPONSE_RETURNED). An idea worth pursuing is that instead of the common logs-traces-metrics approach, we model all system information as events, emit them, and store them externally in an analytical database for deriving intelligence. The benefit of raw event data is that it is in sync with the domain modelling approach (something happened), and it can be used to derive new metrics at any time. I find this far better than trawling through some combination of unstructured text logs, spans, traces, and arbitrary Prometheus/StatsD type metrics.

- Store raw data in a central place : The only way to honour the definition of Observability given above is to store raw data from systems, because the moment we start dealing only in pre-defined metrics, we are bound to them and lose the ability to answer any “new” questions. One common argument against raw data is volume, but there are ways to mitigate that (sampling etc.), while the ability to diagnose your systems in the face of new failure modes is priceless. And since the overall system is distributed, keeping the observability data in distributed silos as well will be much less powerful than bringing all of it together to derive holistic insights.
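A small sketch of why raw events keep new questions answerable. The events below are invented, a plain list stands in for the central analytical store, and the field names (`service`, `region`) are assumptions for the example.

```python
from collections import Counter

# Raw events as they might be stored centrally; field names are illustrative.
events = [
    {"type": "ORDER_CREATED", "service": "orders", "region": "eu"},
    {"type": "ERROR_RESPONSE_RETURNED", "service": "payments", "region": "eu"},
    {"type": "ORDER_CREATED", "service": "orders", "region": "us"},
    {"type": "ERROR_RESPONSE_RETURNED", "service": "payments", "region": "eu"},
]

def count_by(events, *fields):
    """Derive a metric after the fact by grouping raw events on any fields."""
    return Counter(tuple(event[f] for f in fields) for event in events)

# A metric someone planned for up front.
by_type = count_by(events, "type")

# A question nobody anticipated: which region sees the payment errors?
errors = [e for e in events if e["type"] == "ERROR_RESPONSE_RETURNED"]
errors_by_region = count_by(errors, "region")

print(by_type[("ORDER_CREATED",)])   # 2
print(errors_by_region[("eu",)])     # 2
```

Had only a pre-aggregated error count been stored, the per-region breakdown could not have been recovered later.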

General Guidelines

- Use asynchronous frameworks for implementation : I have written before about how we can use asynchronous programming to scale our systems significantly. So when invoking a remote system, we should ensure that we are doing it in an asynchronous manner so as to not block application threads. This is an early implementation/design choice, and if your application framework does not allow it or was not designed for it, then you are out of luck. If you have the option of going asynchronous, always take it: your system and your team will thank you for it when that unexpected spurt of traffic comes.

- Know your callers : By definition, no one is in charge in a distributed system. As a result, we should take as many precautions for internal systems as we might take for external systems. Enforce rate limits if you can, but at the very least know your callers. This will help isolate the origin of trouble when things go wrong; identifying sources of traffic using IP addresses when the system is on fire is not fun.

- Know when to fail : I have spoken a lot about how to make our systems more available under varying conditions, but there are situations when failing is better than doing the wrong thing (someday I will write an article about how our message passing based design resulted in way more orders than we had inventory for). Consistency is a perfectly acceptable choice in many scenarios, and we should be careful to not overcompensate against it.
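For the "know your callers" guideline, a minimal sketch is a fixed-window rate limit keyed on an explicit caller identity. `CallerLimiter` and the caller name are hypothetical, the time is passed in explicitly to keep the example deterministic, and a production version would also need to expire old windows.

```python
from collections import defaultdict

class CallerLimiter:
    """Fixed-window request limit per identified caller."""

    def __init__(self, limit, window_seconds):
        self.limit = limit
        self.window_seconds = window_seconds
        self._counts = defaultdict(int)  # (caller_id, window index) -> requests

    def allow(self, caller_id, now):
        if not caller_id:
            return False  # traffic that does not identify itself is rejected
        window = int(now // self.window_seconds)
        key = (caller_id, window)
        if self._counts[key] >= self.limit:
            return False
        self._counts[key] += 1
        return True

limiter = CallerLimiter(limit=2, window_seconds=60)
decisions = [limiter.allow("checkout-service", now=t) for t in (0, 1, 2, 61)]
print(decisions)  # [True, True, False, True]
```

Even if the limit itself is never enforced, requiring a caller identity on every request means the per-caller counts already exist when something goes wrong.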

I hope you find these guidelines useful in reducing the most commonly found issues in distributed systems design. I would love to hear if you have some other considerations that you find simple to apply but very effective, so that we can add them here!