This is my breakdown and summary of this fantastic 2012 article by Eric Brewer on InfoQ. Although it is 8 years old, it shines a powerful light on many of the issues we are still struggling with in context of micro-service architectures like cross-service transactions, cascading failures and user experience management in distributed systems.

I have written about design considerations in distributed systems before, and this article does a far better job of highlighting the consistency versus availability concerns that I could possibly do. Especially notable is how as an industry we have moved towards consistency and availability trade-off at various levels of system architecture instead of perceiving an up-front, all-in choice of consistency and availability.

This is a direct result of improved practical understanding of working with the constraints of CAP Theorem. We now think more in terms of degrees of CAP rather than binary C-vs-A. Now we focus more on recovery from partition rather picking consistency or availability up front. Hence the emphasis is on being both consistent AND available till the time we must choose one.

A network partition is not something the complete distributed System agrees upon all at once. One node may find itself with another node while the rest of the network sees no such issue. Hence the question of whether a partition has occurred is very local. Hence we need more fine grained strategy to identify and deal with partitions.

The question of partition is a local decision based on latency of communication. If the latency is higher than acceptable, then we are in partition for all practical purposes. This also happens only intermittently, so not all out system choices should be made around this low probability event.

Older architectures would make the C-vs-A choice at this point. However, we now understand that we also have the option of going into “partition mode” where we run the the system in such a way that allows at least limited availability along with the possibility of resolving differences between both sides of the partition after the partition is over.

- Allow some operations and disallow others. Which operations to allow is determined by the invariants of the system, whether they MUST hold at all times, and how can we reconcile the differences if they are not mandatory to be enforced at all times. e.g. Allow credit (harmless) but not debit (dangerous).

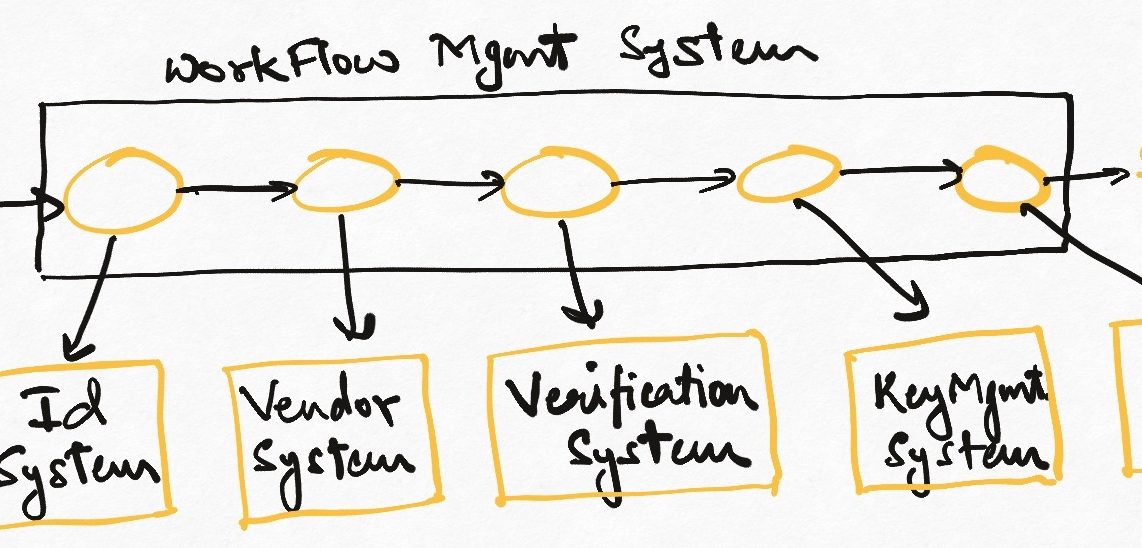

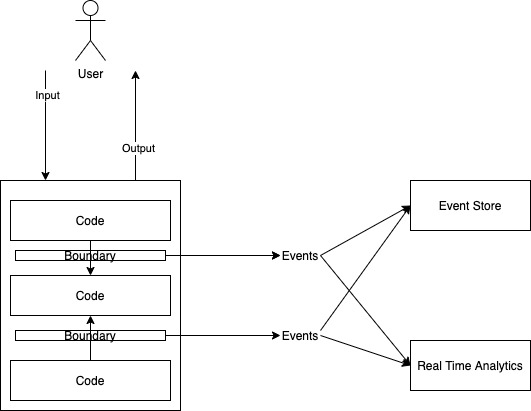

- We can store metadata about events on both sides before we execute those events so that we can serialize the intent once the partition is over. This is especially important when the outcomes are externalized. i.e. in the realm of other system (like debiting a credit card). This is very similar to event sourcing.

- We can use data structures like CRDTs to reconcile system state after partition.

- The best situation is to use commutative operations – operations that can be squashed into a single commit log ordered along their timelines to generate the final, resolved system state. e.g. the Saga pattern in distributed transactions.

The following are the highlights of the article which provide more detail into the arguments and insights. These are just to give the essence, the entire article is an absolute must read.

- The CAP Theorem asserts that any networked shared-data system can have only two of three desirable properties – Consistency, Availability, and Partition Tolerance.

- The theorem first appeared in fall 1998. It was published in 1993 and in the keynote address at the 2000 Symposium on Principles of Distributed Computing, which led to its proof.

- The easiest way to understand CAP is to think of two nodes on opposite sides of a network partition.

- Allowing at least one node to update state will cause the nodes to become inconsistent, thus forfeiting C.

- Likewise, if the choice is to preserve consistency, one side of the partition must act as if it is unavailable, thus forfeiting A.

- Only when nodes communicate is it possible to preserve both Consistency and Availability, thereby forfeiting Partition Tolerance.

- For wide-area systems, designers cannot forfeit P and therefore have a difficult choice between C and A.

- The “2 of 3” formulation was always misleading because it tended to oversimplify the tensions among properties.

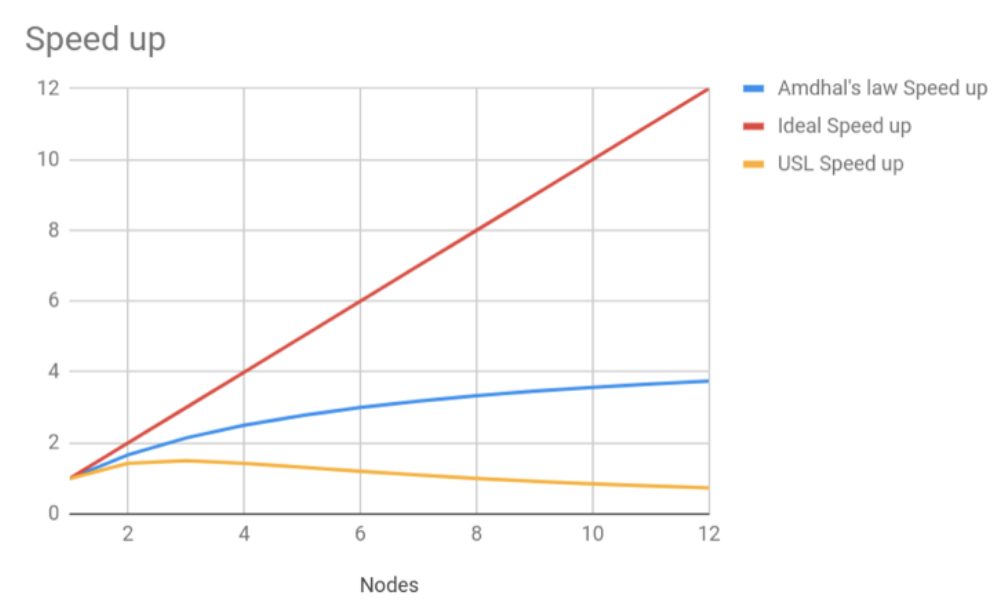

- First, because partitions are rare, there is little reason to forfeit C or A when the system is not partitioned

- The choice between C and A can occur many times within the same system at very fine granularity; not only can subsystems make different choices, but the choice can change according to the operation or even the specific data or user involved

- All three properties are more continuous than binary.

- The modern CAP goal should be to maximize combinations of consistency and availability that make sense for the specific application. Such an approach incorporates plans for operation during a partition and for recovery afterward.

- BASE : Basically Available, Soft state, Eventually consistent.

- ACID : Atomicity, Consistency, Isolation, Durability

- ACID:

- In ACID, the C means that a transaction pre-serves all the database rules, such as unique keys. In contrast, the C in CAP refers only to singlecopy consistency, a strict subset of ACID consistency.

- if a system requires ACID isolation, it can operate on at most one side during a partition because Serializability requires communication in general and thus fails across partitions.

- Operationally, the essence of CAP takes place during a timeout, a period when the program must make a fundamental decision-the partition decision:

- cancel the operation and thus decrease availability, or

- proceed with the operation and thus risk inconsistency.

- Retrying communication to achieve consistency…just delays the decision…retrying communication indefinitely is in essence choosing C over A.

- a partition is a time bound on communication. Failing to achieve consistency within the time bound implies a partition and thus a choice between C and A for this operation.

- there is no global notion of a partition, since some nodes might detect a partition, and others might not.

- Nodes can detect a partition and enter a “partition mode”.

- designers can set time bounds intentionally according to target response times; systems with tighter bounds will likely enter partition mode more often and at times when the network is merely slow and not actually partitioned

- Aspects of the CAP theorem are often misunderstood, particularly the scope of availability and consistency

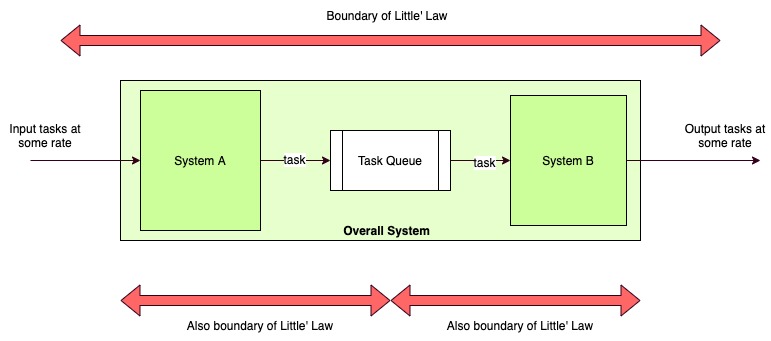

- Scope of consistency reflects the idea that, within some boundary, state is consistent, but outside that boundary all bets are off.

- Independent, self-consistent subsets can make forward progress while partitioned, although it is not possible to ensure global invariants.

- Conversely, if the relevant state is split across a partition or global invariants are necessary, then at best only one side can make progress and at worst no progress is possible

- real systems lose both C and A under some sets of faults, so all three properties are a matter of degree

- given the high latency across the wide area, it is relatively common to forfeit perfect consistency across the wide area for better performance.

- Another aspect of CAP confusion is the hidden cost of forfeiting consistency, which is the need to know the system’s invariants. The subtle beauty of a consistent system is that the invariants tend to hold even when the designer does not know what they are.

- Conversely, when designers choose A, which requires restoring invariants after a partition, they must be explicit about all the invariants, which is both challenging and prone to error.

- Managing Partitions

- Normal operation is a sequence of atomic operations, and thus partitions always start between operations. Once the system times out, it detects a partition, and the detecting side enters partition mode.

- Once the system enters partition mode, two strategies are possible. The first is to limit some operations, thereby reducing availability. The second is to record extra information about the operations that will be helpful during partition recovery.

- Which operations should proceed?

- Given a set of invariants, the designer must decide whether or not to maintain a particular invariant during partition mode or risk violating it with the intent of restoring it during recovery.

- For an invariant that must be maintained during a partition, however, the designer must prohibit or modify operations that might violate it. (In general, there is no way to tell if the operation will actually violate the invariant, since the state of the other side is not knowable.)

- Externalized events, such as charging a credit card, often work this way. In this case, the strategy is to record the intent and execute it after the recovery.

- Partition mode gives rise to a fundamental user-interface challenge, which is to communicate that tasks are in progress but not complete.

- Partition Recovery

- The designer must solve two hard problems during recovery:

- the state on both sides must become consistent, and

- there must be compensation for the mistakes made during partition mode.

- It is generally easier to fix the current state by starting from the state at the time of the partition and rolling forward both sets of operations in some manner, maintaining consistent state along the way.

- Most systems cannot always merge conflicts.

- Conversely, some systems can always merge conflicts by choosing certain operations to be admissible during partition

- Using commutative operations is the closest approach to a general framework for automatic state convergence. The system concatenates logs, sorts them into some order, and then executes them.

- Unfortunately, using only commutative operations is harder than it appears; for example, addition is commutative, but addition with a bounds check is not (a zero balance, for example).

- commutative replicated data types (CRDTs) – a class of data structures that provably converge after a partition

- Compensating for mistakes

- Typically, the system discovers the (invariant) violation during recovery and must implement any fix at that time

- There are various ways to fix the invariants

- trivial ways such as “last writer wins” (which ignores some updates)

- smarter approaches that merge operation

- human escalation

- Recovering from externalized mistakes typically requires some history about externalized outputs.

- Long-running transactions face a variation of the partition decision: is it better to hold locks for a long time to ensure consistency, or release them early and expose uncommitted data to other transactions but allow higher concurrency?

- Serializing this transaction in the normal way locks all records and prevents concurrency.

- Compensating transactions take a different approach by breaking the large transaction into a saga, which consists of multiple sub-transactions, each of which commits along the way

- The designer must solve two hard problems during recovery:

- ATM design : Consistency or Availability?

- Strong consistency would appear to be the logical choice, but in practice, A trumps C. The reason is straightforward enough: higher availability means higher revenue

- The key invariant is that the balance should be zero or higher. Because only withdraw can violate the invariant, it will need special treatment, but the other two operations can always execute.

- Under partition, modern ATMs limit the net withdrawal to at most k, where k might be $200.

- When the partition ends…Restoring state is easy because the operations are commutative, but compensation can take several forms (overdraft fee, legal action)

Read Next – Understanding distributed system as data pipelines

If you liked this, subscribe to my weekly newsletter It Depends to read about software engineering and technical leadership